This article is part of our AI Knowledge Hub, created with Pendo. For similar articles and even more free AI resources, visit the AI Knowledge Hub now.

At Lightful, we run a capacity-building programme for nonprofits using a combination of human-powered learning and technology. As we teach nonprofits how to best use digital tools to reach more people and raise more money, it was natural for us to be interested in the emerging area of generative AI technology and to throw ourselves into learning about it – after all, you can’t teach others what you don’t know yourself.

We quickly realised that there was so much to learn that working ad hoc around other priorities would not do this area justice. So we assembled an internal ‘AI Squad’ composed of designers, digital coaches, engineers and product experts to focus on learning about generative AI and how to integrate it into our products and programmes.

Prioritising AI work

At this point, you might wonder how that was signed off and how the roadmap was adapted. Well, one of the product experts in the team was the Managing Director for Learning, so we had senior buy-in from the get-go. In fact, earlier in the year, we added a new company OKR – have completed one pilot on an AI concept and received positive feedback from beta users – so our foray into the world of AI was very much a business priority, and as such, other plans were deprioritised on our roadmap to make space for it.

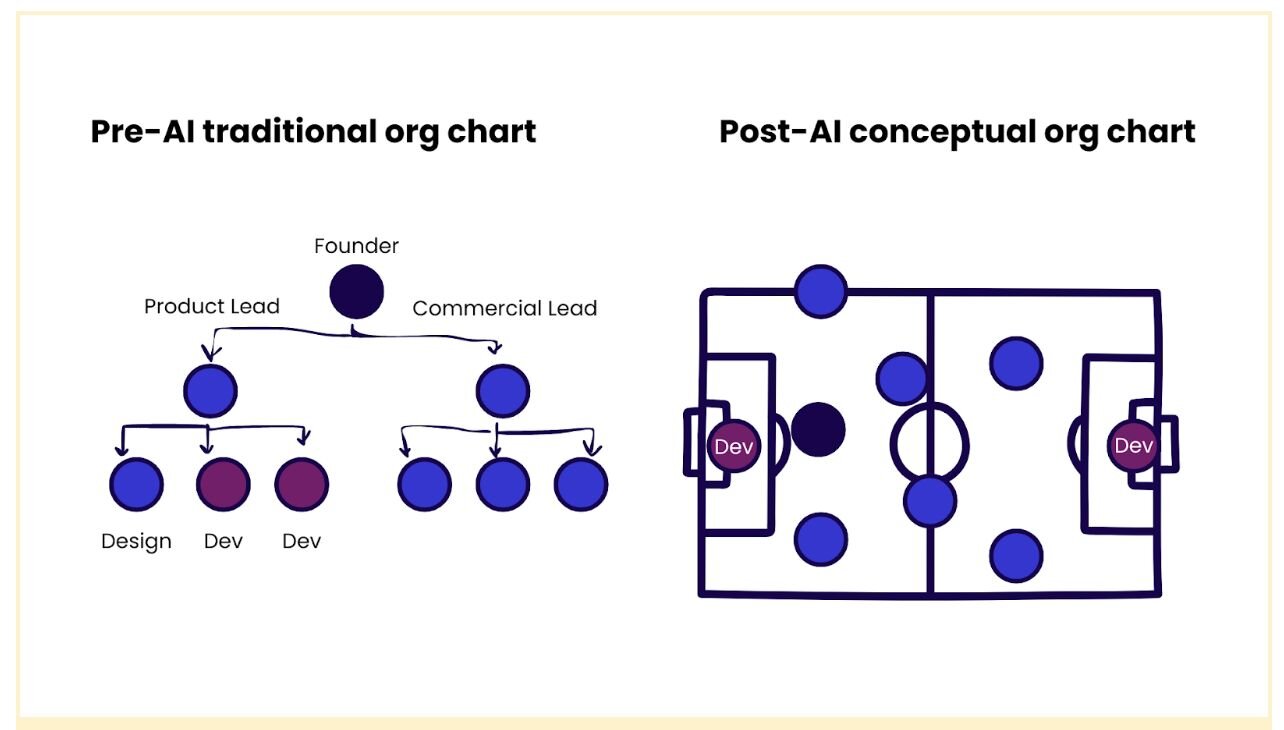

We are also a slightly unusual team, in that we don’t have a formal product manager role. Instead, different people act as product owners for our in-house technology products (like our social media management platform) and for the third-party tools we build on (like our Learning Management System). Of the five developers in our engineering team, two were dedicated full-time to AI.

We started our product development premise from first principles – what problems are charities we work with facing, and can AI tools solve those problems?

Building a product that integrates with generative AI is not like our other integrations. On one level, it is similar to how we integrate with Twitter or Facebook in our social media management platform, in that we send some information via an API call and get some other information back. The key difference, however, is that whereas we know all the possible responses from a social media API (e.g. a success or fail response), the response from the GPT-4 API could be anything. And we might not know whether it is a ‘good’ or ‘bad’ response to the question we asked.
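To make that contrast concrete, here is a minimal sketch in Python. It is an illustration only, not Lightful's actual implementation: `PostStatus`, `handle_social_response` and `check_feedback_text` are hypothetical names, and the specific checks (non-empty text, a length cap of 600 characters) are assumptions chosen purely to show the difference between handling a closed set of outcomes and sanity-checking free text.

```python
from enum import Enum


class PostStatus(Enum):
    """Hypothetical closed set of outcomes from a social media API."""
    SUCCESS = "success"
    FAILURE = "failure"


def handle_social_response(status: PostStatus) -> str:
    # Every possible outcome is known in advance, so each can be handled explicitly.
    if status is PostStatus.SUCCESS:
        return "Post published"
    return "Post failed, please retry"


def check_feedback_text(text: str, max_chars: int = 600) -> list[str]:
    """Return a list of problems found in free-form model output.

    An empty list only means the text passed these basic checks. It does
    not mean the feedback is 'good'; that still needs human judgement.
    """
    problems = []
    stripped = text.strip()
    if not stripped:
        problems.append("empty response")
    if len(stripped) > max_chars:
        problems.append("response too long")
    return problems
```

The first function can be exhaustive because the response space is closed. The second can only rule out obviously unusable output, which is why testing and human review remain part of the process.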

The fact that a generative AI tool can answer ‘anything’ also leads to the temptation to think ‘What could we use this for?’ That, however, is an approach that should be resisted. You must start with the problem and work out a solution, not the other way around.

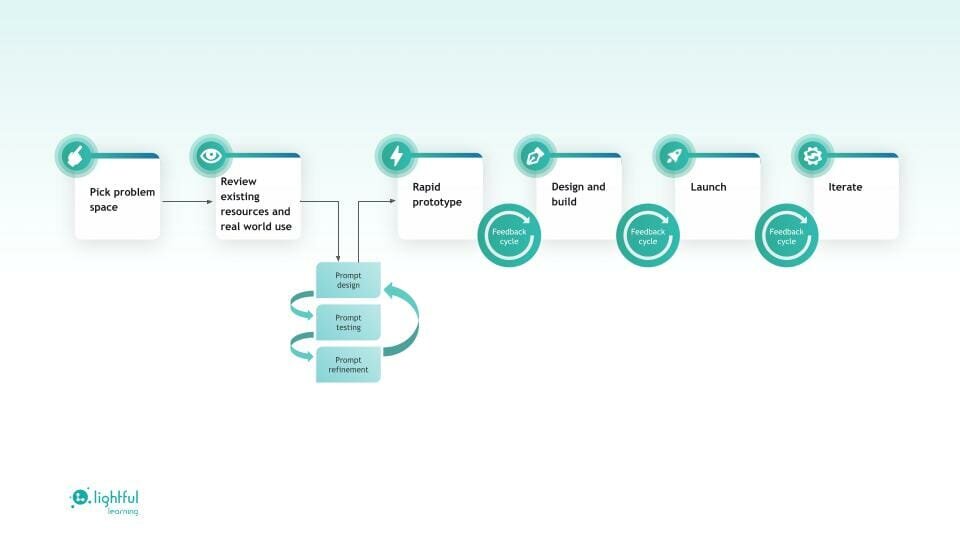

Our approach was to map out all of the challenges we see charities face and narrow down the problems using the opportunity solution tree approach, before starting to see how we could use AI to solve those problems. Once we had a problem space, we could use the available AI tools to see if they worked. This involved adding a new step in our regular product development cycle – prompt design.

Developing with AI and agility

As we built AI prototypes to integrate with our existing technologies, we encountered various challenges, three of which stood out:

- Adapting to rapidly evolving AI technologies: During the initial prototyping of our social media posts AI-feedback tool, GPT-4 was released. This new model significantly enhanced responses and feedback capabilities, but the API response was slower. Balancing response speed, so that users are not kept waiting, against response quality (and measuring what ‘better’ even means) is an ongoing challenge.

- Understanding and establishing best practices: Working with cutting-edge technology often means navigating uncharted territory, so established working patterns were scarce. When best practices emerged, we incorporated them into our iterative development cycles, but this was mostly reactive. OpenAI's support in disseminating best practices has been invaluable in steering us in the right direction.

- Overcoming the steep learning curve of AI and related technologies: The pace of change in AI has been unrelenting this year, with new features, capabilities and tooling seemingly released every week. Although developers no longer need to be experts in machine learning due to the introduction of abstraction layers, acquiring foundational knowledge about underlying AI concepts, and moving quickly as things changed, has been essential.
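The speed side of the speed-versus-quality trade-off described in the first challenge is the easier half to measure. The sketch below is one assumed way to do it, not a description of our tooling: `median_latency`, `fast_model` and `slow_model` are hypothetical names, and the two model functions are offline stand-ins for real API calls. Judging which response is ‘better’ still has to happen separately.

```python
import time
from statistics import median
from typing import Callable


def median_latency(generate: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Median wall-clock seconds taken by `generate` over several runs."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        generate(prompt)
        timings.append(time.perf_counter() - start)
    return median(timings)


# Stand-in functions; a real version would call a model API instead.
def fast_model(prompt: str) -> str:
    return "short feedback"


def slow_model(prompt: str) -> str:
    time.sleep(0.05)  # simulate a slower, more capable model
    return "more detailed feedback"


fast_seconds = median_latency(fast_model, "Review this post", runs=3)
slow_seconds = median_latency(slow_model, "Review this post", runs=3)
```

Using the median rather than the mean keeps one unusually slow call from dominating the comparison.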

Designing with agile practices for AI development

One key challenge in designing for AI is that there are no visible rules on how an AI functions, unlike a traditional interactive system, which has predictable responses based on known rules. This means that, in our design activities, we required a great deal of testing to help us create a ‘mental model’ of how the AI works given particular prompts. This mental model acted as a kind of collective knowledge within the AI Squad (captured on an epic Miro board), which we built upon by sharing the results of our tests and our assumptions about which types of prompts and other inputs generate which types of results.

We used a number of collaborative techniques to enhance our ability to imagine possibilities, tailor products to users’ needs, and iterate rapidly on prototypes, including:

- User story mapping: Breaking down ideas into manageable chunks, illustrating scenarios and use cases during ideation.

- Human-centred design: We immersed ourselves in users' perspectives to understand their key challenges and goals, and determine whether we were actually solving the problems we set out to solve.

- Continuous prompt testing: We encouraged all team members to test prompts to optimise AI outputs and benefit from each person’s perspective. Because different phrasing can produce different outcomes, each person’s slightly different way of communicating meant we could quickly generate a wide variety of prompts.

- Regular cross-collaborative feedback loops: We had daily standups to benefit from feedback from a variety of perspectives, which enabled us to quickly highlight and address problems and ideate on new opportunities.

- Understanding the constraints of AI: We focussed on areas where AI could offer suggestions and feedback rather than wholesale content generation, as this was where we found its application the strongest.

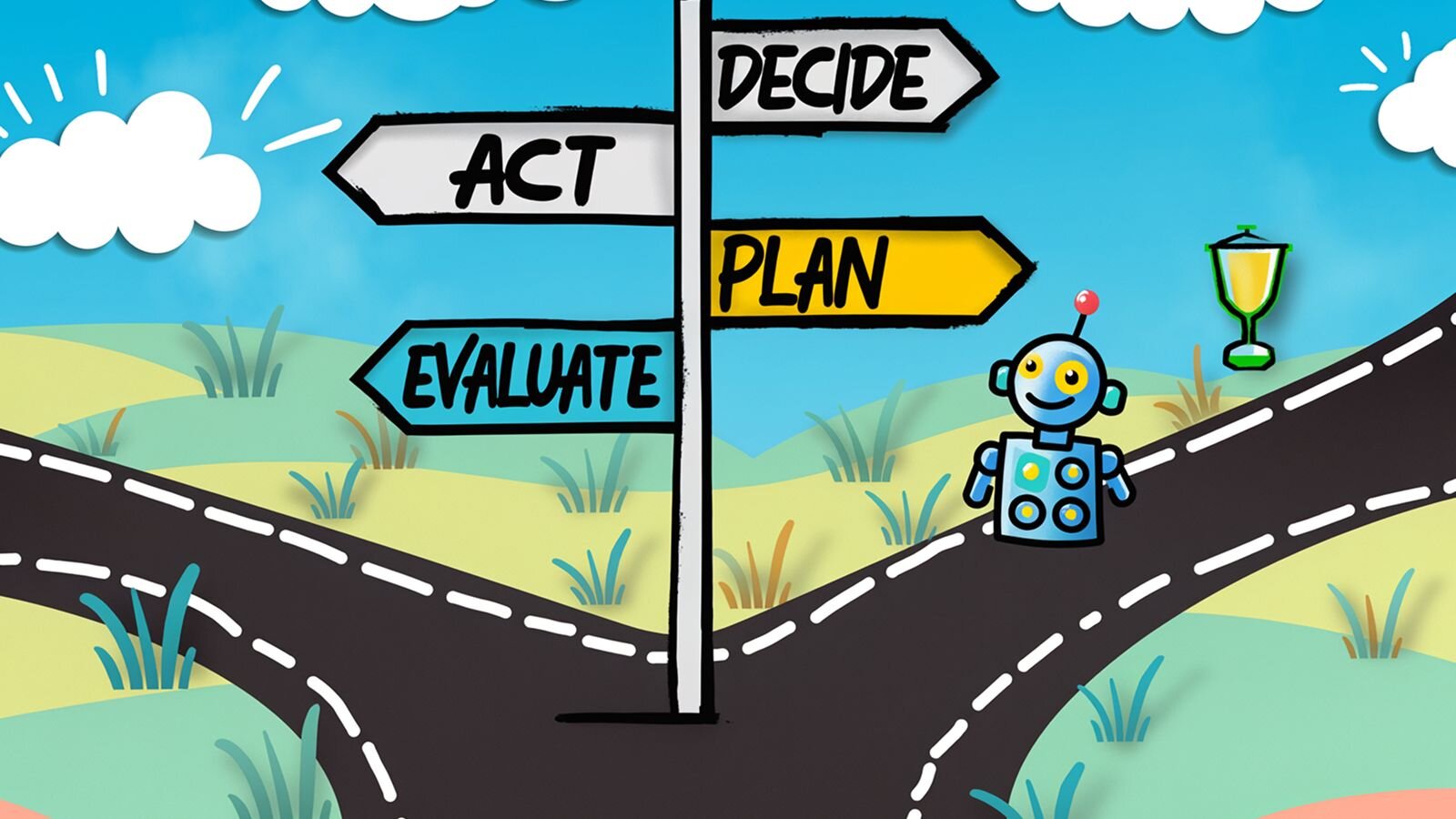

- Keeping the human in the loop: We found that AI worked best as a collaborative tool for our users rather than as one to replace them. This is especially true given that we are a learning company – our AI applications were designed to prompt the user to think. In this way, our applications acted as a kind of cognitive loop moving from user to AI, and back again.
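The prompt testing described in the list above can be organised with a very small harness. This is a sketch under stated assumptions rather than the tooling we used: `run_prompt_variants` and `echo_model` are hypothetical names, the prompt templates are invented examples, and `echo_model` simply echoes its input so the code runs without any API access.

```python
from typing import Callable


def run_prompt_variants(
    variants: dict[str, str],
    post: str,
    generate: Callable[[str], str],
) -> dict[str, str]:
    """Run every prompt variant against the same post and collect the outputs."""
    results = {}
    for author, template in variants.items():
        prompt = template.format(post=post)
        results[author] = generate(prompt)
    return results


def echo_model(prompt: str) -> str:
    # Stand-in for a real model call: echoes the prompt so the sketch runs offline.
    return f"[model output for: {prompt}]"


# One template per team member, each phrased in that person's own style.
variants = {
    "coach": "Give feedback on this social post: {post}",
    "engineer": "List three improvements for the following post.\n{post}",
}
outputs = run_prompt_variants(variants, "We are hiring volunteers!", echo_model)
```

Keeping the post fixed while only the prompt wording changes makes it easier to compare outputs side by side and to share what each phrasing tends to produce.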

How the AI squad process helped us create useful features

By working through this process over a period of months, the team were able to test, prototype and build multiple features. The first – and most used so far – was a new addition to the Lightful Social Platform. With the help of GPT-4, when content is first drafted by the participant, they can click an ‘AI Feedback’ button to get three specific alternative suggestions and insights on how they might improve their social post. The insights explain the principles behind each suggestion, so people can learn best practice theory and how to apply it, instead of just following instructions without understanding the reasoning.
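Because the feature promises exactly three suggestions, each with an insight, it helps to check the shape of the model output before showing it to a user. The following is a minimal sketch, assuming the model is asked to return a JSON list of objects with `text` and `insight` keys. That format, and the names `Suggestion` and `parse_feedback`, are assumptions made for illustration and not a description of how the Lightful Social Platform is built.

```python
import json
from dataclasses import dataclass


@dataclass
class Suggestion:
    text: str     # alternative wording for the post
    insight: str  # the principle behind the suggestion


def parse_feedback(raw: str, expected: int = 3) -> list[Suggestion]:
    """Parse model output, raising ValueError if it does not match the expected shape."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("model output is not valid JSON") from exc
    if not isinstance(data, list) or len(data) != expected:
        raise ValueError(f"expected a list of {expected} suggestions")
    suggestions = []
    for item in data:
        if not isinstance(item, dict) or not item.get("text") or not item.get("insight"):
            raise ValueError("each suggestion needs 'text' and 'insight'")
        suggestions.append(Suggestion(text=item["text"], insight=item["insight"]))
    return suggestions
```

A check like this only confirms structure. Whether the suggestions are actually helpful is still a question for testing with real users.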

By deliberately sequencing the workflow in this way, Lightful uses AI to support staff and volunteers at nonprofits while helping them remain authentic, empathetic and logical – the key basis of building trust.

What’s next for Lightful and AI

The AI squad approach has helped us massively accelerate our learning and spread the knowledge across a wider group of people and teams. Now that we have built our institutional knowledge and experience, our process is adapting. We no longer need to meet every day, as we have a large number of AI projects in progress across different teams in their regular workflows. We even have an AI product roadmap that includes plans to productise the various prototypes we have tested, as well as key problem spaces that we will focus on in a user experience-led discovery process.

We also have longer-term plans for applying AI to more of our internal processes, but the priority for now is to deliver better outcomes for the charities and nonprofits we serve. By embracing collaboration, agility, and a problem-first approach, we plan to create more capable AI-powered solutions that support nonprofit professionals and contribute to their mission-driven work.