The vast potential of AI in healthcare

AI's applications within healthcare are already astounding. These are some of the areas where AI can augment the entire workflow:

Precision diagnostics: By analyzing vast amounts of medical data, including imaging scans, lab results, and genetic profiles, AI algorithms are enabling earlier and more accurate detection of diseases (He et al., 2019). Google Health's work in mammography is a prime example. Its AI model analyzes mammograms, potentially improving breast cancer detection rates and reducing false positives and false negatives. This technology could make a significant difference in early cancer detection and has the potential to expand access to crucial screening, especially in underserved areas.

Drug development and discovery: AI can streamline the drug development process, helping researchers sift through countless molecular combinations to find potential drug candidates (Davenport & Kalakota, 2019). This could lead to faster breakthroughs in treatments for diseases that currently lack effective options. For instance, Exscientia is a pioneering company using AI to design new drug molecules. Its platform analyzes vast datasets of biological information to predict which molecules are most likely to succeed in treating specific diseases, accelerating the drug discovery process.

Personalized treatment plans: AI can help doctors tailor treatment recommendations with unprecedented precision (Rajkomar, Dean, & Kohane, 2019). Rather than a one-size-fits-all approach, imagine treatments based on your unique genetic makeup, medical history, and lifestyle. This could mean more effective therapies and fewer side effects. A leading example is Tempus, a company that combines AI-powered analysis of genomic data with clinical expertise to help oncologists identify targeted therapies and matching clinical trials for cancer patients.

Virtual assistants and chatbots: Already, AI-powered virtual assistants and chatbots provide round-the-clock patient support. They field basic questions, help triage symptoms, and make scheduling appointments easier – a huge relief for overburdened healthcare systems and patients struggling to navigate complex health information (Davenport & Kalakota, 2019). A prime example is Ada Health, whose AI-powered symptom checker allows users to input their symptoms and receive potential diagnoses and guidance on the next steps.

Robot-assisted surgery: Robotic systems, increasingly supported by AI algorithms, help surgeons operate with great precision and fewer errors (He et al., 2019). This translates to smaller incisions, reduced recovery times, and better outcomes for patients facing daunting procedures. A well-known example is the da Vinci Surgical System by Intuitive Surgical. This surgeon-controlled robotic system provides enhanced visualization and control, allowing surgeons to perform complex procedures with a minimally invasive approach.

Administrative workflow optimization: AI has the potential to streamline countless administrative tasks in healthcare (Davenport & Kalakota, 2019). From patient record management to resource allocation, AI can reduce bureaucracy, freeing up healthcare workers to focus on what matters most – caring for patients. For example, many hospitals are now using AI-powered tools from companies like Qventus to predict patient flow and optimize bed allocation. This helps avoid bottlenecks, reduces wait times, and improves the overall efficiency of hospital operations.

The unrelenting imperative: Safety and ethics

While AI's potential benefits ignite excitement, it's imperative to address the potential risks and ethical dilemmas that go hand-in-hand with its use (Obermeyer & Emanuel, 2016). A focus on safety must be the guiding star in AI's integration into the complex world of medicine:

- Algorithmic bias: AI algorithms are only as objective as the data they are fed (Obermeyer & Emanuel, 2016). If we use biased or incomplete datasets, we run the risk of these systems perpetuating and even amplifying existing healthcare disparities.

- Data privacy and security: Developing AI requires massive amounts of sensitive patient information (He et al., 2019). Protection against data breaches cannot be an afterthought; stringent measures for data privacy and security are non-negotiable. Otherwise, patients will understandably lose trust in the very system designed to help them.

- Explainability and transparency: Many AI algorithms, particularly deep learning systems, operate as "black boxes," making it hard to understand their decision-making processes (Rajkomar, Dean, & Kohane, 2019). This lack of transparency hinders clinicians' ability to fully adopt these tools and raises questions about potential errors or missed diagnoses.

- Accountability and liability: If a medical error occurs due to an AI system, who is responsible? This complex question goes beyond doctors and hospitals – we need clear accountability frameworks for AI developers as well (Rajkomar, Dean, & Kohane, 2019).

- Potential job displacement: The automation enabled by AI raises real concerns about job displacement for healthcare workers (Davenport & Kalakota, 2019). Strategies for retraining and redeployment must be a part of the conversation from the start, not a reaction to workforce disruptions.
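The algorithmic bias concern above can be checked in a simple way: compare how often a model misses true cases in each patient group. The sketch below is only an illustration under stated assumptions. The group labels, the record layout, and the helper names `false_negative_rate_by_group` and `largest_gap` are hypothetical, and the sample data is made up rather than drawn from any real system.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Return {group: false-negative rate} for (group, actual, predicted) records.

    actual and predicted are booleans, where True means the condition is
    present (actual) or flagged by the model (predicted). Groups with no
    positive cases are omitted because the rate is undefined for them.
    """
    positives = defaultdict(int)
    misses = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if not predicted:
                misses[group] += 1
    return {group: misses[group] / positives[group] for group in positives}


def largest_gap(rates):
    """Difference between the highest and lowest group rates."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0


# Made-up illustrative data, not real patient results.
sample = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, True), ("group_a", True, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", True, False), ("group_b", True, False),
    ("group_a", False, False), ("group_b", False, True),
]

rates = false_negative_rate_by_group(sample)
print(rates)
print(largest_gap(rates))
```

A large gap between groups does not prove the model is unfair on its own, but it is a signal that the training data or the model deserves a closer look before the tool reaches patients.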

A safety-centric framework for AI implementation

Successfully integrating AI into healthcare requires a proactive approach, with safety and ethics at its heart. Here's what that means in practice:

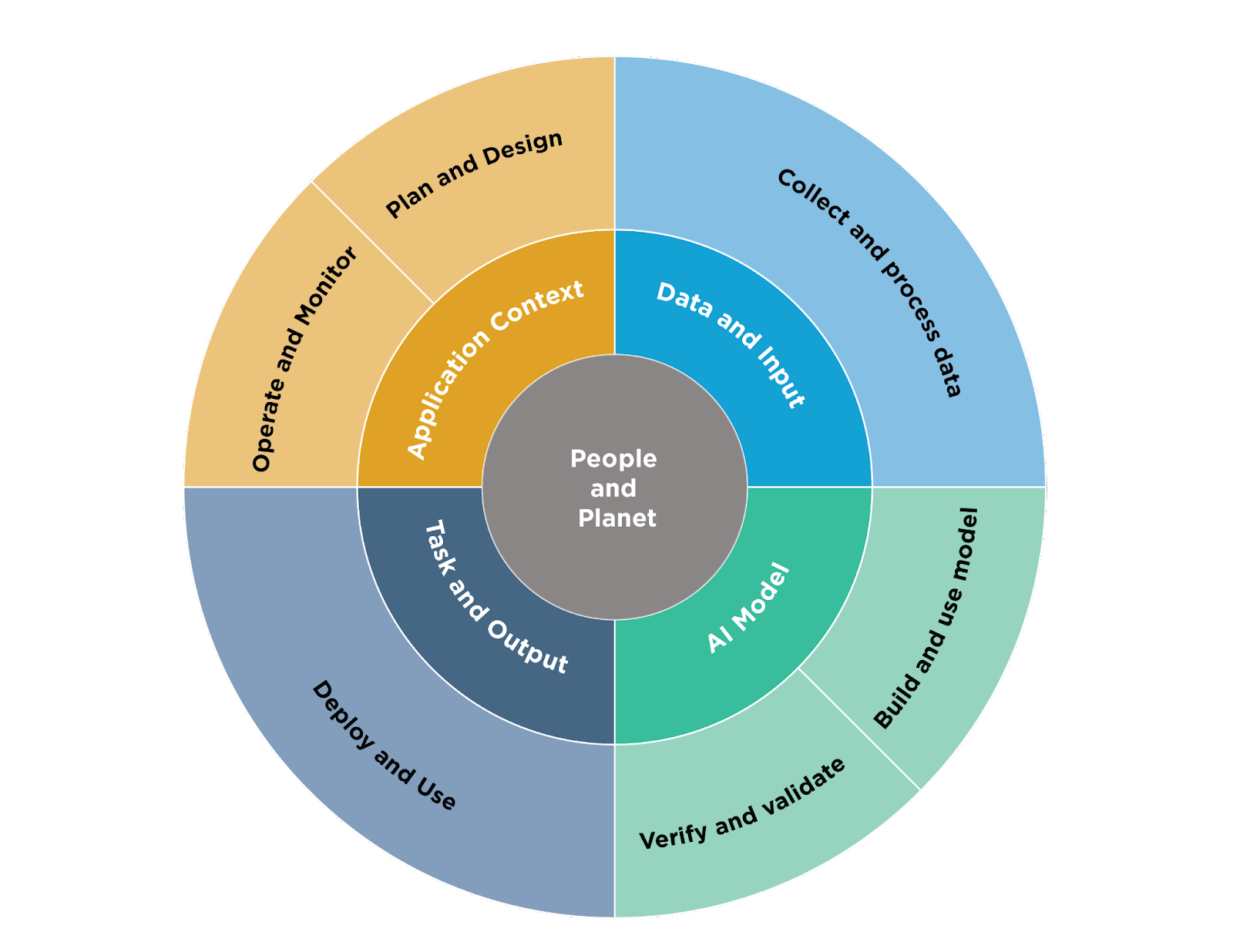

- Risk management: NIST (The US National Institute for Standards and Technology) has established a framework for AI risk management, which clearly defines the steps that need to be taken to address the risk methodically.

Image Source: NIST AI RMF

- Robust datasets: We need diverse, high-quality, and meticulously curated datasets to ensure representativeness and minimize the potential for bias (Kompa, Snoek, & Beam, 2021).

- Algorithmic transparency: Design AI systems with interpretable results whenever possible (Rajkomar, Dean, & Kohane, 2019). This allows doctors to understand the reasoning behind recommendations, empowering them to make informed decisions alongside the AI.

- Rigorous validation and testing: AI systems must undergo extensive and continuous validation in real-world clinical settings (He et al., 2019). It's about more than accuracy; it's about reliability under pressure.

- Human-AI collaboration: Rather than aiming for full automation, the future lies in empowering healthcare providers with AI tools (Davenport & Kalakota, 2019). Doctors and nurses, armed with insights from AI, remain the central decision-makers.

- Addressing data privacy: Stringent measures for data encryption, anonymization, and secure storage are paramount (He et al., 2019). Patients must have full visibility into, and control over, how their data is used.

- Continuous monitoring and evaluation: Even deployed AI systems need constant monitoring for potential biases, unintended consequences, and areas for improvement (Kompa, Snoek, & Beam, 2021).

- Clear liability frameworks: We need a clear understanding of responsibility and liability when it comes to AI-related medical errors (Rajkomar, Dean, & Kohane, 2019). This should involve all stakeholders from the start.

- Evolving regulatory landscape: Regulatory agencies must work proactively alongside industry experts to establish guidelines and standards for the use of AI in healthcare, balancing safety and innovation (He et al., 2019).

- Emphasis on education and training: Healthcare providers at all levels need to be educated about both the capabilities and limitations of AI (Davenport & Kalakota, 2019). Building trust requires knowledge.
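To make the data privacy item above more concrete, here is a minimal sketch of one common building block: replacing a patient identifier with a keyed hash before records are used for model development. It uses only Python's standard `hmac` and `hashlib` modules. The field names, the `prepare_record` helper, and the demo key are hypothetical. Note that this is pseudonymization, which is weaker than full anonymization, because the remaining fields can still identify someone when combined.

```python
import hashlib
import hmac

# Hypothetical field names treated as direct identifiers in this sketch.
DIRECT_IDENTIFIERS = {"name", "phone", "address"}


def pseudonymize_id(patient_id: str, secret_key: bytes) -> str:
    """Replace a patient identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and swap the patient ID for a pseudonym."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["patient_ref"] = pseudonymize_id(record["patient_id"], secret_key)
    return cleaned


# Demo key for illustration only; a real key would be stored and rotated securely.
demo_key = b"demo-key-do-not-use-in-production"
record = {
    "patient_id": "MRN-0001",
    "name": "Jane Doe",
    "phone": "555-0100",
    "age_band": "40-49",
    "finding": "benign",
}

safe = prepare_record(record, demo_key)
print(sorted(safe))
```

Using a keyed hash rather than a plain hash means someone without the key cannot rebuild the mapping by hashing guessed identifiers, while the same patient still maps to the same reference across datasets.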

Beyond the technical: Ethical considerations

While the technical hurdles of ensuring AI safety are significant, we must equally address the complex ethical dilemmas that arise:

- Informed consent: Patients need to clearly understand how AI is being used in their care (Obermeyer & Emanuel, 2016). Informed consent must be a foundational principle.

- Addressing disparities: Mitigating potential biases in AI systems is about more than technical fixes; it's a healthcare justice issue (Obermeyer & Emanuel, 2016).

- Promoting fairness and justice: We must ensure that AI-powered healthcare tools are accessible to all patients, regardless of their background (Obermeyer & Emanuel, 2016).

- Upholding patient autonomy: It's vital that AI tools respect patient autonomy and allow for patient preferences to be prioritized in decision-making processes.

The transformative future: Realizing AI's full potential in healthcare

Artificial intelligence has the potential to transform medicine, prevent disease, and improve patient outcomes worldwide. However, to ensure AI becomes a force for good, a safety-centric mindset must underlie every step of development and implementation.

By prioritizing ethics, transparency, and continuous evaluation, we can create a future where AI and healthcare professionals work in harmony. This collaboration holds the key to safer, more personalized, and more equitable healthcare for everyone.
