In part one of this two-part podcast series, we speak with experienced product expert Itamar Gilad about the benefits of evidence-based product decisions and ideas, and how they can help teams achieve key product goals.

Featured links

Follow Itamar on LinkedIn and Twitter | Itamar's website | 'Idea Prioritization with ICE and the Confidence Meter' piece | Sign up for Itamar's newsletter

Episode transcript

Randy Silver:

Okay, so you know when you're feeling all out of sorts, and your roadmap is a mess, and you're chasing down bugs, and the exec team are flip-flopping around?

Lily Smith:

Okay, Randy, I get the idea. What's your point?

Randy Silver:

Well, that's exactly my point, really. What's the gist? Today we're talking to Itamar Gilad. He's a product management coach, and we're gonna talk to him about the GIST framework, which is a tool to help develop products in an evidence-guided way.

Lily Smith:

That sounds both fancy and really useful. So let's get to it. The Product Experience is brought to you by Mind the Product.

Randy Silver:

Every week, we talk to the best product people from around the globe about how we can improve our practice, and build products that people love.

Lily Smith:

Visit mindtheproduct.com to catch up on past episodes, and to discover an extensive library of great content and videos.

Randy Silver:

Browse for free, or become a Mind the Product member to unlock premium articles, unseen videos, AMAs, roundtables, discounts to our conferences around the world, and training opportunities.

Lily Smith:

Mind the Product also offers free ProductTank meetups in more than 200 cities, and there's probably one near you.

So it's lovely to welcome you on the podcast today.

Itamar Gilad:

Thank you very much for inviting me, really happy to be here.

Lily Smith:

So for listeners, it would be great if you could give us a real quick intro to who you are, and your background in product.

Itamar Gilad:

So I started out as an engineer. I worked at IBM back in the mid-90s, where we built one of the earliest broadband cable networks, which just shows you how old I am. And that was actually an interesting project, because for the first time I saw how projects can be technically successful, but the company will just decide to ditch them and say, we're not interested. So we failed on the business viability part of things. Then I moved on to work as a product manager from the year 2000 in a number of startups and scale-ups in Israel, where I'm from, and I worked for a large international, Microsoft. And finally, I worked at Google for a few years, mostly on Gmail, where I kind of owned part of the product. And for the last five or six years, I've been coaching, teaching, and writing about product management in a newsletter. And I created a few tools of my own for product management.

Lily Smith:

Amazing. Thank you for that intro. And it sounds like you've done kind of all the things and I'm looking forward to finding out all of the hot tips that you have for us as product people. But we're going to talk today about the jest framework that you've developed, would you give us like a real quick intro into what the test framework is and how it should be applied? Sure.

Itamar Gilad:

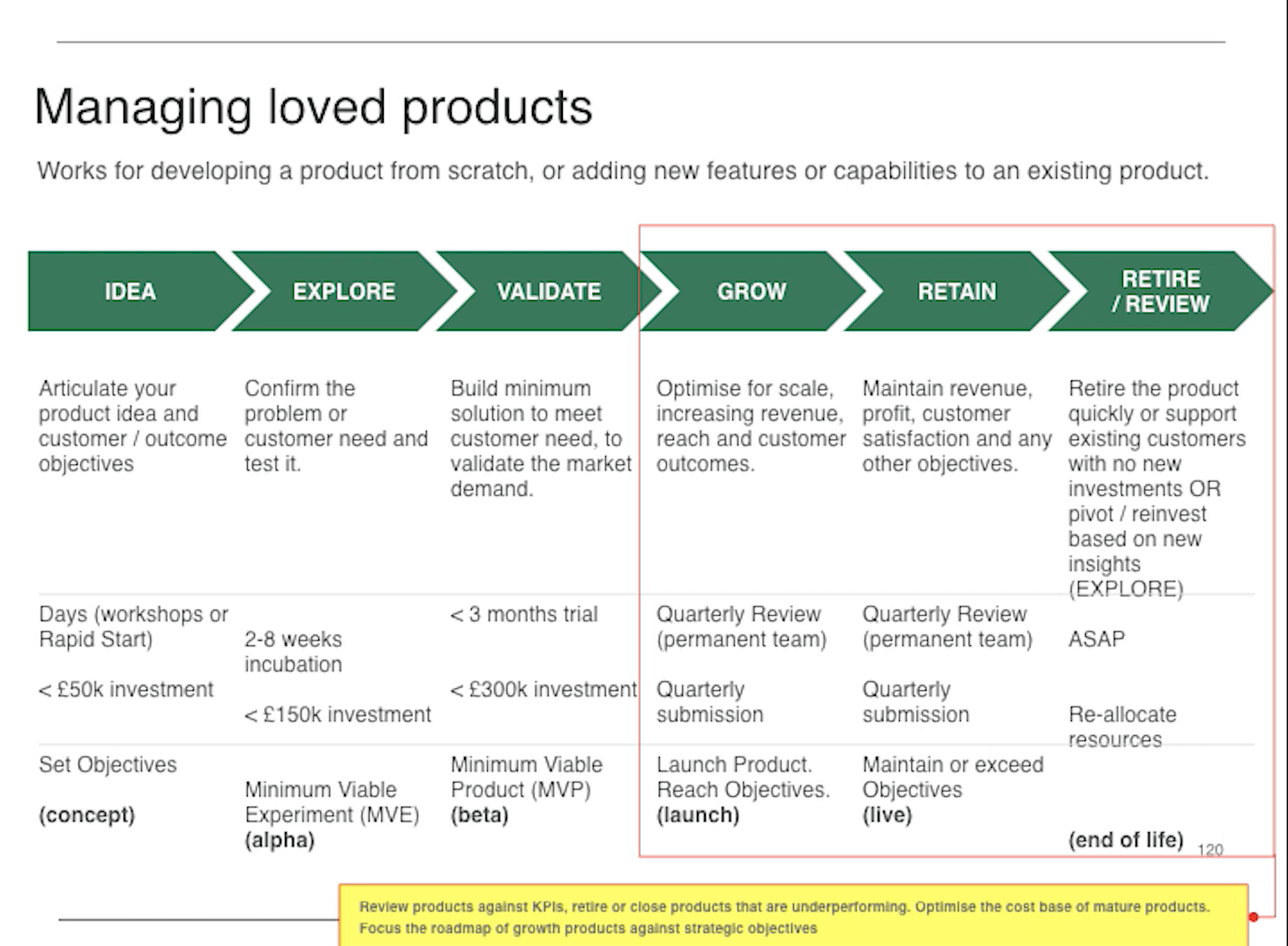

So it's a framework that is kind of inspired by what I experienced at Google. And it tries to help companies move to a more evidence guided mode of work. It's completely inspired by other much smarter people than me, people were we invented product discovery, or lean startup or design thinking. It's really based on the same principles. The framework itself has four layers, goals, ideas, steps and tasks. Goals is essentially about defining what we want to achieve. But in such a way that once we develop the product and discover it, we have a basis to compare against. So every time we evaluate an idea, we'll ask ourselves, does it move us towards the goal? Can we pivot it in some way to create even more impact? The ideas of themselves delay or five years is about evaluating ideas in a slightly more detached, less emotional way, not about opinions, not about our excitement or resentment of the idea, but more about does they say they're actually work. And then we want to actually validate our assumptions about ideas. And that's where the steps come in steps are just short activities or projects, where we test the ideas some more, develop it a little bit, test it and then regroup and look at the results. So build, measure, learn, etc. And the task layer is actually about connecting this to the reality of agile teams. So whether you use Kanban or Scrum or Scrumban doesn't matter. Just can work with your system today.

Randy Silver:

You make it sound really easy. So the question is, why do people need your help? Why are companies not using an evidence-based approach these days? What's wrong with them?

Itamar Gilad:

So I think part of the problem is we don't realise how bad we actually are at detecting good ideas. The statistics are very clear. People like Ronny Kohavi and others have collected the results of thousands and thousands of experiments. And at best, best-case scenario, one in three ideas actually works or creates any sort of positive measurable improvement. And in many cases, it's much lower than that. Kohavi worked for Airbnb and shared statistics that 8% of experiments in some part of Airbnb actually showed any measurable improvement. And that, in my experience, is pretty typical. So 10 to 15% is normal, which means that of what you have right now in your roadmap, in your backlog, at best one in three ideas is actually going to work. The rest is going to be waste. That's one part we're not very well aware of. And the other part, which is even sadder, is how terrible we are at determining which are the good ideas and which are the bad ones. I'm speaking from experience. As a product manager for many years, I was very confident about the ideas I was proposing and pushing forward. And in hindsight, I got it wrong a lot. We have all sorts of cognitive biases and psychological mechanisms in our heads to convince ourselves that we can actually predict the future. And the more senior we are, the more determined we are that we can actually do this, and that this is our job, to specify the future. But we can't, unfortunately. And I think partly we don't notice, and partly we just feel that the right way to move forward is to be very deterministic, very determined, very sure that this is the one winning idea, and let us go for it. And then we launch and iterate, right, which I think Jeff Gothelf said is the biggest lie in the industry. And that's why we need principles and frameworks and tools to help us compensate: not replace our judgement, but kind of supercharge it. And evidence, I found, is the most powerful tool to do that.

Lily Smith:

And so you tend to use the phrase evidence-guided product development. Is that different to data-led product development?

Itamar Gilad:

I tend to think that all of the methods and all the principles kind of agree on the same thing, they just call it by different names. Data sometimes is useless because it doesn't tell a clear story; it's not indicative of anything. Evidence, for me, is data that has been processed, where we understand what it means: it either supports or refutes a hypothesis. And evidence is something we can base our decisions on. Data, sometimes not so much.

Lily Smith:

Yeah. Okay. So thinking about the goals aspect, then, what are the attributes of good evidence-guided goals?

Itamar Gilad:

So the obvious answer, which will not shock anyone, is that we need to focus on outcomes, not output. Because once we switch to output, obviously, people stop looking to learn, stop trying to find evidence and evaluate ideas, and just focus on execution. Even with outcomes, we need to cut down the number of outcomes we give to a team or to a group, because we really are not able to move on many outcomes at the same time. It's really hard to actually find ideas that move the needle, as we saw from the statistics. So it's best to trim down the number of outcomes. And the third thing is to try to connect the outcomes that the teams are working on all the way up to the top-level outcomes of the company. One of those is usually called the North Star metric, which measures how much value we're trying to create for our market. And I propose that we need to have an equivalent one for the business, which is the top business metric: how much value we're capturing back from the market, whether it be revenue or profit or market share. I think it's good to pick one. And then we need to create some sort of metrics hierarchy that connects all these things together. When a team evaluates ideas, they're not just comparing them against local metrics; they can also see the impact all the way up the chain. And they can make better evidence-based decisions, if you like.

Randy Silver:

I don't want to spend too much time on goals, because there's a lot of other stuff to dig into. But just one question, just to set the context for the rest of the discussion. Who sets the goals? Are these things set within the product team? Are they set with business stakeholders? Are they set at the senior management level? What's the context that we're putting the rest of the framework in?

Itamar Gilad:

So I think the goals discussion, the outcomes, is really where you want the managers and the stakeholders and all the people that are involved to get involved, to voice their opinion, and to agree these are really the outcomes we want to chase. Not everyone in the company, just the ones that are relevant to this decision. I think the best practice is usually that the objectives, or the intent of what we're trying to achieve, come top down, usually based on the business strategy of the company. But the key results come bottom up. So whether it's which key results we're going to measure, or what the targets are, it's always a negotiation. We need to meet in the middle and agree on what is a good achievement by the end of the cycle, usually a quarter: what's ambitious, yet achievable. And focus on just one, two, maximum four key results that this particular team is trying to move this quarter.

Randy Silver:

So that moves into ideas. You're trying to create an environment where you've got the trust and the relationship with people, so that this team, the product team itself, is coming up with ideas that are credible, that are believable, that are achievable. Is that fair?

Itamar Gilad:

I would rephrase this a little bit. I don't think the ideas need to come bottom up, necessarily. I mean, managers can come up with great ideas, stakeholders can come up with great ideas. Even your mother can give you a great idea. I propose to product managers not to do what I did. For many years, I was a gatekeeper. I was just like, no, no, no, not a good idea. And then, all of a sudden, from the top came an idea I couldn't say no to, and this is what we did for the next 12 months. So I suggest you accept all ideas, but be willing to evaluate them, and be prepared to evaluate a lot of ideas, just like you do with bugs. I mean, when we get bugs, we become good at triaging them very quickly. So you need to do the same with your ideas. The triage with ideas is about impact, confidence and ease, or ICE, which was invented by Sean Ellis. So when we triage ideas, we really want to focus on three key criteria. The impact, which means how much this idea is going to help us move towards the goal, and we need to be very specific about which key result we're measuring against. The ease, which means how easy or hard it is going to be to implement, and it's usually the opposite of effort. And the last element, which is very important, is the confidence: how sure are we that our assessment of impact is true? Especially impact, because sometimes an idea that seems very impactful or very positive turns out to create negative impact. So the confidence is another super important element. By assessing ideas in this way, we're able to slightly detach from our emotions, from our excitement about the idea. And I've seen how the analysis of impact, confidence and ease both shortens the discussion, you know, these brainstorms about which ideas to pick, and gets to results much quicker. And the people came out of the meeting feeling they had come to a much better-founded decision.
It's still important to understand that impact, confidence and ease are guesses. And the key part is to validate these guesses, which is the job of steps.
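
To make the ICE triage described above concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the `Idea` class, the 0 to 10 ranges for all three scores, the example ideas, and the choice to multiply the three scores are not from the episode, which does not say how the scores are combined.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: float      # guess, 0-10: how much it moves the chosen key result
    confidence: float  # guess, 0-10: how sure we are about the impact estimate
    ease: float        # guess, 0-10: roughly the opposite of effort

    @property
    def ice(self) -> float:
        # Assumption: combine the three guesses by multiplying them.
        return self.impact * self.confidence * self.ease


def triage(ideas: list[Idea]) -> list[Idea]:
    """Return ideas ordered from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice, reverse=True)


# Hypothetical ideas, all scored against the same key result.
ideas = [
    Idea("Onboarding checklist", impact=6, confidence=2, ease=7),
    Idea("Referral programme", impact=8, confidence=1, ease=3),
    Idea("Fix signup error copy", impact=3, confidence=5, ease=9),
]

for idea in triage(ideas):
    print(f"{idea.name}: ICE = {idea.ice}")
```

Because all three inputs are guesses, the ranking is a starting point for discussion rather than a decision.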

Lily Smith:

Randy, do you fancy levelling up your product management skills?

Randy Silver:

Always.

Lily Smith:

Are you ready to take that next step in your product career?

Randy Silver:

I thought you'd never ask.

Lily Smith:

Well, you're in luck. Mind the Product runs regular interactive remote workshops, where you can dedicate two half days to honing your product management craft with a small group of peers. You'll be coached through your product challenges by an expert trainer, and walk away with frameworks and tools you can use right away.

Randy Silver:

And they're offering a one-time 15% discount for any of their August 2022 classes taking place on August 11 and 12.

Lily Smith:

You can choose from Product Management Foundations, Communication and Alignment, or Metrics for Product Managers.

Randy Silver:

Just use the code SUMMER15 when buying your ticket. That's S-U-M-M-E-R-1-5.

Lily Smith:

Find out more and book your place on an August workshop at mindtheproduct.com/workshops.

So just thinking about the ideas: I know probably most of us are in a position where we get, or have, too many ideas for each of the different metrics that we want to move. But I've worked with teams where a lack of ideas has actually been a bit of a problem. They're kind of stuck in one way of thinking, or they're not used to generating ideas. Potentially they've been fed stuff for too long, so they're not thinking creatively. Do you have any suggestions for anyone in that situation, where they are struggling to think of ways to move the needle, as it were?

Itamar Gilad:

So idea generation is a weird thing. When you think you have a bunch of great ideas, that's when other ideas come and compete with them. Other people come in: no, you have to do my idea. And sometimes it's a very tough political situation, because some of these people are really hard to say no to. On the flip side, sometimes your managers expect you to come with ideas, and you're kind of running on empty. You don't have any good ideas, or they feel they're not ambitious enough. In this latter case, I highly recommend stopping and saying, we're not quite ready to try to move the key result. We need to go and do a little bit of investigation, a little bit of research, at a minimum go and talk to our customers. And there are many good techniques. This is slightly outside of GIST, how you come up with ideas. There are many different ways: you can analyse data, you can interview customers, you can observe them in field studies. There's a tonne of good ways to generate ideas. You can do a design sprint, a hackathon, all of these are good. Once you do have a bunch of ideas in your bank, then the question becomes which ones we should go for. Our natural tendency is to try to pick a winner, and to compete: everyone's competing so that their favourite idea will become the winner. And if there are people in the room that have power or influence, it becomes kind of an unfair game. So what I suggest is to tone down this kind of dynamic and say, we don't need to pick one, we need to pick five, between three and five per key result. And all we need right now is not a firm decision that these are necessarily the best ones. Because if we validate, and we can validate very quickly, that those are not good ones, we have another five in the bank to replenish them. All we need is a hint that these are the top five. And the hint usually comes from ICE. So by ICE scoring them very quickly, we can kind of see how they stack up.
But then, and this is, I think, a misconception that I see with many teams, it's not necessarily about sorting the spreadsheet by ICE score and following it strictly. We need to use judgement. If we see an idea in the middle of the pack that seems very promising to us, we should pick that one too. If for some reason the senior VP is demanding that their idea be tested, let's test that idea. The key point here is we're not going to invest nine months now in building this thing. We're going to test it, and the early tests will be very, very quick. So it's okay to pick it. Let's reduce the tension of picking a little bit.
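
The picking approach described above (a shortlist of three to five ideas per key result, with ICE as a hint and judgement allowed to override it) can be sketched as follows. The function name `shortlist`, the dictionary keys, and the sample backlog are hypothetical and only serve the illustration.

```python
from collections import defaultdict


def shortlist(ideas, per_key_result=5, judgement_picks=()):
    """Pick candidate ideas to test for each key result.

    ideas: list of dicts with hypothetical keys "name", "key_result", "ice".
    judgement_picks: names the team (or a stakeholder) wants tested
    regardless of where they rank.
    """
    by_key_result = defaultdict(list)
    for idea in ideas:
        by_key_result[idea["key_result"]].append(idea)

    picks = {}
    for key_result, group in by_key_result.items():
        ranked = sorted(group, key=lambda i: i["ice"], reverse=True)
        chosen = ranked[:per_key_result]
        # ICE is only a hint: add ideas picked by judgement even if they rank lower.
        chosen += [i for i in ranked[per_key_result:] if i["name"] in judgement_picks]
        picks[key_result] = [i["name"] for i in chosen]
    return picks


# Made-up backlog for illustration.
backlog = [
    {"name": "A", "key_result": "activation", "ice": 120},
    {"name": "B", "key_result": "activation", "ice": 90},
    {"name": "C", "key_result": "activation", "ice": 60},
    {"name": "D", "key_result": "activation", "ice": 20},
    {"name": "E", "key_result": "retention", "ice": 45},
]

print(shortlist(backlog, per_key_result=3, judgement_picks={"D"}))
```

The ideas that are not picked stay in the bank, ready to replace any shortlisted idea that fails an early test.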

Randy Silver:

Let's talk about the Confidence Meter that you use alongside that as well. How does that work?

Itamar Gilad:

The Confidence Meter is a tool that I created to help with my own problem, which was evaluating evidence. What I see a lot is people using weak evidence as a self-conviction tool, or a tool to convince others that this is a good idea. And I really wanted to rank evidence, and to have some tool, which is what I created, that takes the evidence we have and gives us a number in the range of zero to 10. So it fits perfectly within ICE. And the higher the number, the more confidence we have. It works a little bit like a thermometer: it goes from very low confidence to high confidence. And it has basically four quadrants, or four parts. The very low quadrant, the low confidence one, is all about opinions. Maybe it's my own self-conviction, or a bunch of like-minded people like me, and we're all convinced that this is a great idea. And maybe we attach it to some theme. It's great because it's about the metaverse or web3. Or it's great because Gartner says that in the next five years, there'll be $5 billion of investment into this field. Or it's great because it perfectly aligns with our strategy. All of these are incredibly low-confidence forms of evidence. So many bad ideas were built on the basis of such logic that we can't decide to launch any idea in full solely based on this. We have to move forward. The next one is assessment. We talked about one form of assessment, which is ICE. Another form of assessment is reviews, but good reviews. Not the, you know, stakeholder quarterly review, but one-on-one reviews with your stakeholder, with your lawyer, with your product marketing manager, just to see if there are some risks that they can surface in the idea, or some problems that you didn't think of. A design review, these things are good, because other people can help you find flaws in your ideas that you didn't see, and kind of detach you from them a little bit.
Other forms of assessment are back-of-the-envelope calculations, Business Model Canvas, business modelling in general, impact analysis. You can go as deep as you want on these things. And sometimes some ideas will just die at this level. Once you do this analysis, you realise that actually they're not as strong as they first seemed, and maybe they're weaker than other candidate ideas you have. It's perfectly okay to park ideas here just based on assessment. The next quadrant is about collecting data and facts. So fact finding, data gathering. It can come from our logs, it can come from customer interviews, it can come from deep competitive analysis. It's important to differentiate between two types. There's anecdotal evidence, maybe a sporadic customer request, or one competitor has it. You cannot imagine how many times I've seen companies launch a product just based on the fact that it aligns with their strategy, it sounded good to them, and one competitor had it. That's all they needed; they went ahead and built the whole thing. Entire strategies are built this way. Not enough. You need to go to market data, which is about much larger datasets, surveys, analysis of all your competitors, and so on. And even that is enough to launch only specific ideas, because the confidence level you'll get just with data is only medium-low. You haven't actually tested your idea; you just found that there's some support in the market for the concept. You have to go on to tests and experiments, which is the last quadrant. And this is really where you get higher confidence. But of course, there are different types of tests. There are early-stage tests, where we fake the idea: it's a prototype, or a fake door, or Wizard of Oz. There are mid-stage tests, where it's a rough version of the idea. And there are late-stage tests. And slightly stronger even than this are A/B experiments and release tests. So it's really a judgement call.
And again, there's no algorithm to replace this, to decide how far you need to go with your idea to feel that you're confident enough to make a decision either to park it or to launch it. With minuscule tweaks, it's okay to just do an assessment: okay, quick review, let's launch it, let's not spend time testing it. For bigger ones, maybe you need to change some things in an existing feature in a way that you feel is low risk, and maybe data is enough. But for most others, we need to go to some level of testing. So that's what the confidence tool is there to help us do: to evaluate the confidence we have, and to encourage us to move a little bit forward.
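
As a rough illustration of the idea behind the Confidence Meter described above, the sketch below maps types of evidence to a 0 to 10 confidence score. Only the ordering of the four groups (opinions, assessment, data, tests) comes from the episode. The numeric values, the evidence labels, and the rule of taking the strongest evidence collected are assumptions made for this example and are not the values of the actual tool.

```python
# Hypothetical scores: only the ordering (opinions < assessment < data < tests)
# is taken from the episode; the numbers are invented for illustration.
EVIDENCE_SCORES = {
    "opinion": 0.1,         # self-conviction, like-minded colleagues, themes, strategy fit
    "assessment": 0.5,      # ICE analysis, reviews, back-of-the-envelope calculations
    "anecdotal_data": 1.0,  # a sporadic customer request, one competitor has it
    "market_data": 2.0,     # larger datasets, surveys, analysis of all competitors
    "early_test": 3.0,      # prototype, fake door, Wizard of Oz
    "mid_test": 5.0,        # rough version of the idea
    "late_stage_test": 7.0,
    "ab_experiment": 9.0,   # A/B experiments and release tests
}


def confidence(evidence):
    """Return a 0-10 confidence score for an idea.

    Assumption: the score is set by the strongest evidence collected so far.
    """
    unknown = set(evidence) - set(EVIDENCE_SCORES)
    if unknown:
        raise ValueError(f"Unknown evidence types: {sorted(unknown)}")
    return max((EVIDENCE_SCORES[e] for e in evidence), default=0.0)


print(confidence(["opinion", "assessment"]))
print(confidence(["opinion", "market_data", "early_test"]))
```

The resulting number can be used as the confidence input when ICE scoring an idea, while the decision on how far to test remains a judgement call.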

Randy Silver:

It sounds like for something big, so new market segments, a new product entirely, things like that, before you commit significant build resources, you want to go through an awful lot of testing. Is that fair?

Itamar Gilad:

It's fair. Absolutely. For big ideas, you need to do a lot of tests. It's not linear: sometimes you change the idea and you go back a stage, etc. But I would say that even if an idea costs a week, if you're able to put it into an A/B test, do that. Again, if it's a tweak, don't worry about it. But if you see some risks in it, if you do an assumption mapping, for example, and you see some risks, put it into an A/B test, or into some test that is quick and will give you the validation you need, and only then launch it. So it's not just about size. It's more about risk, and about how sure we are that we have the necessary data to make a good call.

Lily Smith:

And how do you introduce this kind of scoring methodology with the team? Because I know in the first quadrant, which is the near-zero confidence level, you mentioned, you know, just something that aligns with business strategy or is vaguely in the right area. And I can just imagine some conversations with key stakeholders going, "Oh, well, you know, it's aligned with the business, actually, so our confidence is really high, and I just believe in it." And having the charisma or the persuasive power of their personality to be able to railroad the rest of the team into going, "Yeah, okay, let's just do it," or try it. Is there a way that you introduce this to get everyone on the same page, and honest with that scoring?

Itamar Gilad:

So there's a flip side to this question. First of all, I expected exactly the same result, because we're disempowering them. We're taking away their superpowers to predict the future and to determine what we should build. And I presented this idea to many executive teams, including business stakeholders and very powerful CEOs. And surprisingly, of the whole presentation, the Confidence Meter is the thing they love the most. And here's why. Because these people also see a lot of ideas coming from all over, from their colleagues, from the bottom. And they see really bad ideas being implemented. And everyone is just aching for some slightly more scientific way to evaluate this, especially CEOs and CTOs, because they need to budget, they need to make decisions, and people are just coming to them with shiny, you know, pitch decks. So they absolutely want to embrace this. When it comes to toning down your own excitement, and not forcing the team, and allowing them to actually evaluate your idea, that's a transition, absolutely. For those who don't understand why it's important, one useful analysis is to look at the roadmap for last year, for example. All these ideas we got excited about, what happened to them, actually? How many of them created impact, beyond just launches? What actually changed? How many actually launched on time? Roadmaps are often a train wreck. We don't launch half the things in there, we launch new things that came in, and most of these things don't move the needle whatsoever. So it's about injecting some reality, and some real evidence about your process as well, into the discussion, not just opinions. Don't just come to them with the philosophy and the books. You really should talk to them in euros or dollars, about how much money you're wasting every single quarter on ideas that don't work. You can do that analysis. People like Rich Mironov and myself wrote articles about how you can analyse this, and the numbers are staggering.
So you can do this cultural change if you come prepared.
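
The roadmap review described above can be reduced to simple arithmetic: add up what was spent on launched ideas that showed no measurable impact and express it as a share of the total. The sketch below uses a hypothetical `roadmap_waste` function and made-up cost figures; it is not the method from the articles mentioned in the episode.

```python
def roadmap_waste(items):
    """Estimate spend on ideas that did not move the needle.

    items: list of (name, cost, moved_the_needle) tuples, with cost in one currency.
    Returns the wasted amount and its share of total spend.
    """
    total = sum(cost for _, cost, _ in items)
    wasted = sum(cost for _, cost, moved in items if not moved)
    share = wasted / total if total else 0.0
    return wasted, share


# Made-up figures for illustration only.
last_year = [
    ("Feature A", 120_000, False),
    ("Feature B", 80_000, True),
    ("Feature C", 200_000, False),
]

wasted, share = roadmap_waste(last_year)
print(f"Spent on ideas with no measurable impact: {wasted:,} ({share:.0%})")
```

Presenting the result in euros or dollars, as suggested in the answer above, tends to make the case more tangible than a count of launches.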

Randy Silver:

I'm sorry, folks, this one was just too good to fit into one episode. Check out next week, same bat time, same bat podcast when we dive into steps and tasks.

Lily Smith:

Hopefully, we won't actually be diving into steps, as that sounds quite painful. But yes, there's more great stuff to come, including my amazing revelation that product managers could be project managers after all. Shock! The Product Experience is the first and the best podcast from Mind the Product. Our hosts are me,

Randy Silver:

Lily Smith, and me, Randy Silver.

Lily Smith:

Louron Pratt is our producer and Luke Smith is our editor.

Randy Silver:

Our theme music is from Hamburg-based band Pau. That's P-A-U. Thanks to Arne Kittler, who curates both ProductTank and MTP Engage in Hamburg and who also plays bass in the band, for letting us use their music. You can connect with your local product community via ProductTank, regular free meetups in over 200 cities worldwide.

Lily Smith:

If there's not one near you, maybe you should think about starting one. To find out more, go to mindtheproduct.com/producttank.