In this case study, Dmytro Prosyanko, Product Manager at McKinsey & Company, Dr. Andrew Pike, Data Scientist, and Danylo Pavliuk, Product Designer at Temper, discuss why effective collaboration between product, data science, and design is crucial for successfully developing next-gen smart tech products.

Overview

This case study, co-authored by all three of us, draws on our experience at Buoy Labs working on Buoy, an AI-based home water controller and leak detection device. Through the discoveries we made and the lessons we learned, we intend to leave you with three key insights:

- How to build mutual trust between consumers and smart home products

- How to maintain a positive user experience when AI-based decisions could impact the day-to-day life of the user

- How data-driven design can solicit and engage users to help improve the product

A Bit About Buoy

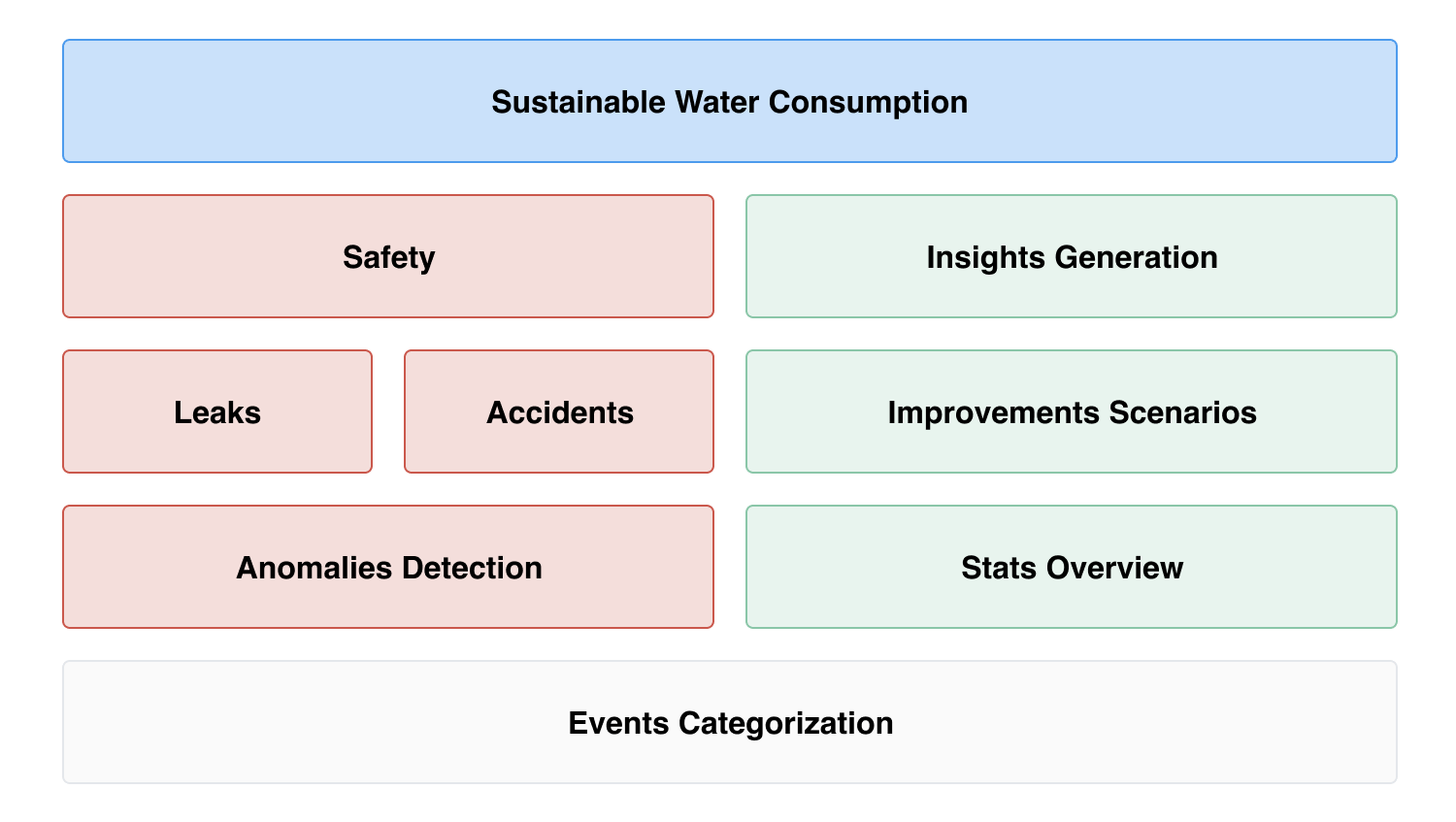

Buoy is a whole-home water monitoring and leak detection device that can be installed on a residential property's water main by a professional plumber, just like a standard water utility meter. It measures water flow at the point of entry, sends the data to the cloud, and converts that data into actionable insights. Two main smart features distinguish the product:

- It categorizes the type of water usage (e.g. dishwasher, sprinklers, toilet flush) using machine learning

- It detects leaks and other anomalous water usage (e.g. running faucet)

If Buoy detects a possible leak, it alerts the homeowner via their smartphone. They can then activate an integrated valve to shut off the water, potentially averting a damaging and costly disaster.

The Approach

First, the Buoy product team laid out a straightforward vision:

Minimize wasted water in residential homes due to inefficient appliances, accidental overuse, and damaging leaks

The US Environmental Protection Agency estimates that more than 10% of household water is lost to leaks, and the agency even promotes an annual Fix a Leak Week. But many homeowners don't know where to begin. Buoy's goal is to empower homeowners to conserve water in a sustainable way by providing insights into their water consumption and catching leaks as they happen.

To deliver on that vision, the team set three objectives:

- Detect all leaks, big or small, as quickly as possible

- Categorize water usage with maximum accuracy (strive for 100%)

- Engage users to provide feedback on whether predictions were right or wrong

However, the team was constrained by several additional requirements:

- A seamless user experience that feels like magic: The product should work straight out-of-the-box without requiring user input

- Everything must happen in real-time: If a pipe suddenly springs a leak, the algorithm needs to rapidly detect the problem, issue a timely alert, and prompt for action

- Users should want to interact with the app: They may wish to review their water consumption data, get a breakdown of their usage by category, or learn personalized tips on how to save water

Achieving these objectives while satisfying the additional constraints proved to be challenging.

How the Product Works

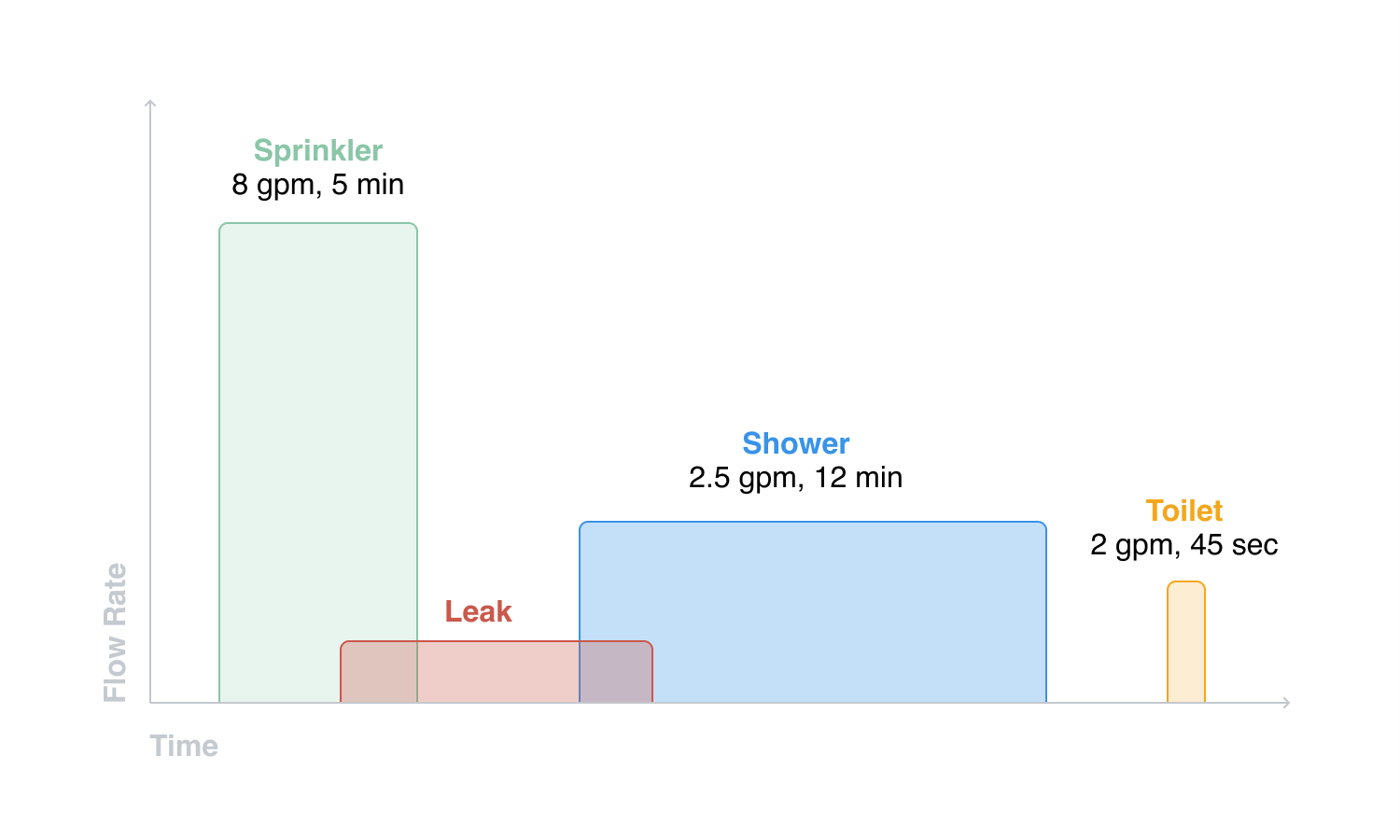

Machine learning is at the core of Buoy. It's what allows the product to differentiate a shower from a sprinkler. The algorithm relies on pattern recognition to learn the unique signature of each water fixture in the home. For example:

- A toilet's flush has a fixed volume

- Showers run at a specified flow rate

- Sprinklers often cycle at the same time of day
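To make the idea of a fixture "signature" concrete, here is a minimal sketch that checks the three cues above (volume, flow rate, time of day) with hand-written rules. It is not Buoy's algorithm, which learns these patterns per home with machine learning. The `WaterEvent` fields, the `guess_fixture` function, and every threshold are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class WaterEvent:
    volume_liters: float     # total water used during the event
    duration_seconds: float  # how long the water was flowing (must be > 0)
    start_hour: int          # local hour of day (0-23) when the event began

    @property
    def flow_rate_lpm(self) -> float:
        """Average flow rate in liters per minute."""
        return self.volume_liters / (self.duration_seconds / 60.0)


def guess_fixture(event: WaterEvent) -> str:
    """Toy rule-based guess; all thresholds are made-up illustrative values."""
    # A toilet flush has a roughly fixed volume and is over quickly.
    if 4.0 <= event.volume_liters <= 8.0 and event.duration_seconds <= 90:
        return "toilet"
    # Sprinklers often cycle at the same time of day (assumed: early morning).
    if event.start_hour in (4, 5, 6) and event.duration_seconds >= 600:
        return "sprinkler"
    # Showers run at a characteristic flow rate for several minutes.
    if 6.0 <= event.flow_rate_lpm <= 12.0 and event.duration_seconds >= 180:
        return "shower"
    return "unknown"


print(guess_fixture(WaterEvent(volume_liters=6.0, duration_seconds=45, start_hour=14)))
```

A learned model replaces these fixed thresholds with signatures fitted to each home, which is why two homes with different toilets or shower heads can still be categorized correctly.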

The Data Challenge

We initially chose to ask for a two-week learning period as a way to provide quality results without demanding too much from the customer. The alternative was to ask users to actively calibrate the device to their home. We assumed that asking a homeowner to run around the house flushing toilets and running their washing machine was a surefire way to kill the AI magic!

However, machine learning does benefit from user feedback. Tracking and improving our accuracy was only possible if users were willing to provide us with ground-truth information.

As a starting point, the app allowed users to confirm whether Buoy was correct, or to recategorize if it was wrong or gave a false alarm. The more users validate their data, the better the results will be in future.

When it came to data, we faced a major challenge: how to balance "it just magically works" with our need for user-verified data? This was a classic chicken-and-egg problem. Training an accurate machine learning algorithm requires clean verified data. Yet that data is obtained from users who expect accurate machine learning results in the first place. How could we get the data we needed from real houses in the field to make a functional AI-based product?

Towards Functional Solutions

It was clear we needed lots of users to tell us how they were using their water. The most straightforward way to achieve that was to dramatically increase the household installation base. Unfortunately, that wasn't a viable short-term solution and so we relied on our current user base.

We considered ways to relax our constraints while still delivering value. Our users were early adopters, after all – perhaps they would be willing to help train the product?

First, we reconsidered the idea that the product needs to magically categorize water usage out of the box without user input. We wanted that user input.

Data, especially early data, is immensely valuable. It would improve the product for everybody else. We already had a feature where users could provide feedback through the app. While some users did this, many did not.

Searching for more ways to solicit feedback from our users, we found a clue in our support tickets. Our in-house support staff discovered that customers were surprisingly open and forthcoming about discussing their water usage. Each leak a customer experienced was a real-world story that had affected their life.

People talked about watering their gardens, their showering habits, their laundry routine, bad nights spent on the toilet, neighbors stealing their water, pets chewing through rubber hoses, kids severing sprinkler heads with the lawnmower, housesitters discovering a catastrophic leak while the owner was on vacation, and plenty more.

The realization that some users were eager to share extremely private information about their water consumption was a pivotal point for us to come up with a workable solution to our data collection problem.

The answer was data-driven design and strong UX. We needed to better understand our users and their motivations for providing us with their personal data. Only then could we determine who was likely to give us feedback. This, in turn, would help us create an intuitive interface allowing users to easily provide that feedback while also keeping them engaged and interested in their data.

Understanding Users

Of course, not all users are the same. They have different motivations for using products and varying levels of enthusiasm.

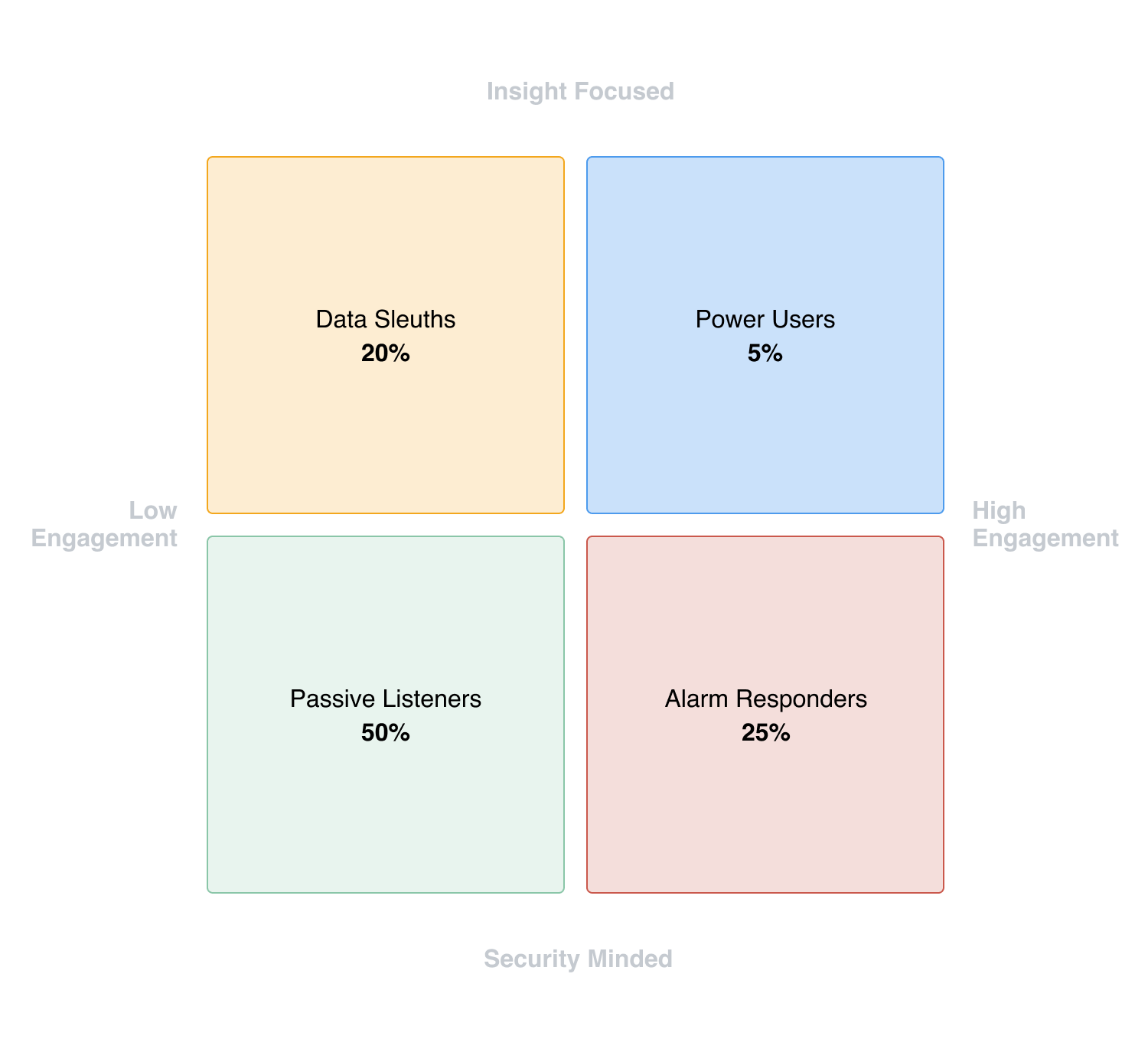

Taking a data-driven approach, we analyzed user data to infer their motivations and behavior. This included metrics like how often they categorized events, responded to leak alerts, and otherwise engaged with their data in the app. Based on this analysis, we were able to divide users into different cohorts and estimate the membership.

We grouped users based on two axes:

- Motivation (security/insights)

- Engagement (low/high)

Combining the two axes gave us four cohorts, shown with their estimated share of users:

- Passive Listeners 50%: Security-Minded, Low Engagement. Users are primarily interested in using Buoy as a passive leak detection device. They rarely interact with the app and are not likely to provide feedback.

- Alarm Responders 25%: Security-Minded, High Engagement. Users are actively interested in Buoy's leak detection capabilities. They respond to leak alerts and provide feedback.

- Data Sleuths 20%: Insight-Minded, Low Engagement. Users are interested in gaining insights about their water usage. They may provide some information upfront, but interact less as time goes on.

- Power Users 5%: Insight-Minded, High Engagement. Users are highly interested in their water usage data. They frequently interact with the app and provide feedback. Although rare, their constant feedback is invaluable.
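The two-axis grouping can be written down as a simple lookup. The sketch below uses the cohort names and shares from the list above. The dictionary layout and the function names `assign_cohort` and `share_by_engagement` are assumptions made for illustration, and the sketch does not show how motivation and engagement were inferred from app metrics.

```python
# Cohort names and percentage shares come from the list above; the data
# structure and function names are illustrative assumptions.
COHORTS = {
    ("security", "low"): ("Passive Listeners", 50),
    ("security", "high"): ("Alarm Responders", 25),
    ("insights", "low"): ("Data Sleuths", 20),
    ("insights", "high"): ("Power Users", 5),
}


def assign_cohort(motivation: str, engagement: str) -> str:
    """Map a (motivation, engagement) pair to its cohort name."""
    name, _share = COHORTS[(motivation, engagement)]
    return name


def share_by_engagement(engagement: str) -> int:
    """Total percentage of users at the given engagement level."""
    return sum(
        share
        for (_motivation, level), (_name, share) in COHORTS.items()
        if level == engagement
    )


print(assign_cohort("insights", "high"))
print(share_by_engagement("low"))
```

Summing the shares this way makes the size of the problem visible: the two low-engagement cohorts together account for 70% of users.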

Data-driven Solutions

Based on our understanding of users, about 50% were willing to provide some sort of feedback. The challenge was how to get as much information as possible from each type of user. What could be done further to benefit most from active users? And could we convince passive users to engage more? This led to the following design objectives to increase data sharing:

- Allow users to provide more information on water appliances in their homes (home profile)

- Allow users to easily leave feedback (event re-categorization)

- Incentivize users to engage with the app and increase the amount of user-confirmed data

We also observed a strange-but-true pattern: users were five times more likely to tell us that Buoy was wrong than that it was right. That is, users were mostly recategorizing events or correcting false leak alerts rather than confirming that the product was working as intended. This finding became the central theme of our future design decisions.

Our basic approach was to prioritize user feedback by presenting users with our best results and asking whether we'd got it right. In other words, machine learning does the guesswork based on past learning, highlights the uncertainty of its predictions, and offers second- and third-best guesses; the user then makes the final selection and confirms it. We sought to engage with users in the right way, at the right time, and with minimum information, offering two simple options: confirm or reject.

Home Profile: Improving Learning Time

How much information were users willing to provide about their home?

Ideally, we want to know exactly which fixtures and appliances are in the house. That means every toilet, sink, sprinkler, garden hose, etc. should be identified to better categorize a home's water usage and reduce false leak alerts. This is especially helpful if the user has uncommon types of appliances, such as water softeners, whole-home humidifiers, and evaporative coolers.

We call this mapping a home profile and this was the first area to improve. Instead of waiting for two weeks to learn a home's fixtures and appliances, we'd give the user an option to respond to a short survey while onboarding and then map it manually.

However, we struggled with several difficult realities of implementing this feature.

- Surveys suck: Users were likely to drop out if it was inconvenient

- We'd not yet established trust: Users can be reluctant to provide personal data to a new service

- Magic requires illusion: The "magic" experience we were striving for is hard to achieve when you reveal how the trick works

This is where our guiding idea of trusting the user kicked in. That is, instead of requiring users to complete a home profile, we asked them if they'd like to.

If they did – great, we could speed up the learning process. If they didn't – fine, we'd take time to build the profile, show it to them and ask if we got it right.

We want to stress that we considered it essential that users were not obligated to share. We respect privacy and we wish to promote data transparency and keep our users in control of their data.

Ever-Changing Homes

We were happy with our idea of a home profile but, of course, homes are constantly changing. What if a customer remodels their bathroom with new fixtures, or upgrades their washing machine? How would Buoy know?

We needed to achieve active learning.

Each new appliance and fixture has a distinct pattern. For example, a front-loading washing machine uses water in a very different way from a top-loading washing machine. If we knew a customer had recently installed a front-loading washing machine, we could compare it with data from other washing machines and categorize it with higher confidence (rather than falsely think it was a leak).

We approached the problem by using probability. In the field of machine learning, we often deal with likelihoods rather than absolutes. That is, nothing is ever black or white, but always some shade of gray. Our predictions should reflect how certain we are that an event can be categorized as one thing rather than another likely category.

To achieve active learning, we would assign a category based on our algorithm's best guess, show it to the user and ask if we were right (we'd present second and third best guesses too). In a situation where we were uncertain about a category, which would be the case with a new appliance, we'd communicate that uncertainty to the user and prompt them for their input.
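The prompting logic described above might look roughly like the following sketch. It assumes the classifier already produces a probability per category. The function names, the dictionary keys, and the 0.7 confidence threshold are hypothetical, not values from the Buoy product.

```python
def top_guesses(probabilities: dict[str, float], k: int = 3) -> list[tuple[str, float]]:
    """Return the k most likely categories, most likely first."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


def build_prompt(probabilities: dict[str, float], confidence_threshold: float = 0.7) -> dict:
    """Package the best guess, the runner-up guesses, and an uncertainty flag.

    The threshold is an assumed value; a real system would tune it.
    """
    guesses = top_guesses(probabilities)
    best_label, best_probability = guesses[0]
    return {
        "best_guess": best_label,
        "alternatives": [label for label, _probability in guesses[1:]],
        # Flag the event for explicit user input when confidence is low,
        # as would be expected for a newly installed appliance.
        "prompt_user": best_probability < confidence_threshold,
    }


new_appliance = {"washing machine": 0.45, "leak": 0.30, "dishwasher": 0.20, "shower": 0.05}
print(build_prompt(new_appliance))
```

Showing the alternatives alongside the best guess means a correction is a single tap on the right category rather than a search through every possible fixture.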

Incentivizing Engagement

We love our power users and their enthusiasm to push Buoy to its full potential, but not everyone has the time to continually engage with the product. We had to deliver value for other low-engagement user types, which in our case were Passive Listeners and Data Sleuths (70% of all users), and encourage them to interact with their data. We did that by merging the home profile and event recategorization into one experience using a scoring-based technique.

In the best-case scenario, where a user tells us precisely what fixtures they have in their household, the certainty is nearly 100%. Since it's confirmed by the end user, we would assign an affinity score of 5 — the highest possible.

In the opposite situation, when we have zero confirmation from a user and therefore default to the algorithm's guesswork, we assign a score of 1, the lowest possible. Based on this engagement scale, we could let users know where they currently are and what they can do to improve the machine learning algorithm's accuracy.

We called this feature Improve Home Profile. It's a way to take users through a flow interface and inform them about the fixtures and water usage events that Buoy is most uncertain about. This allows us to save users' time by only asking them the most important questions to get the information we need to improve our algorithm.
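As a rough sketch of how such a flow could choose its questions, the code below ranks fixtures by their score on the 1-to-5 scale and asks about the least certain ones first. Only the endpoints of the scale (1 for an unconfirmed guess, 5 for a user-confirmed fixture) come from the text above. The `FixtureEntry` type, the `questions_to_ask` function, the intermediate scores, and the question limit are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class FixtureEntry:
    name: str
    score: int  # 1 = algorithm's guess only, 5 = confirmed by the user


def questions_to_ask(profile: list[FixtureEntry], max_questions: int = 3) -> list[str]:
    """Pick the fixtures to ask about, least certain first.

    Fixtures the user has already confirmed (score 5) are skipped so the
    flow only spends the user's time where it helps the most.
    """
    unconfirmed = [entry for entry in profile if entry.score < 5]
    unconfirmed.sort(key=lambda entry: entry.score)
    return [entry.name for entry in unconfirmed[:max_questions]]


profile = [
    FixtureEntry("toilet", 5),
    FixtureEntry("washing machine", 1),
    FixtureEntry("sprinkler", 3),
    FixtureEntry("shower", 2),
    FixtureEntry("dishwasher", 4),
]
print(questions_to_ask(profile, max_questions=2))
```

Capping the number of questions keeps the flow short for low-engagement users while still targeting the entries that matter most to the algorithm.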

Conclusion

We cannot emphasize enough the importance of listening to your customers. The "users are always right" mentality forged several of our product initiatives:

- Home Profile

- Requesting feedback to actively learn

- Incentivizing engagement

By introducing the home profile, we enabled Buoy to categorize water usage almost out of the box rather than after a two-week learning period.

The push to encourage users to actively confirm or change categorizations also helped decrease learning time and increase user satisfaction. Initially, it would take at least one week to get feedback from the end user (reviewing a support ticket, making a call, etc.), but having the system ask the user directly about uncertain events reduced that to almost immediate learning.

Engagement incentives also enabled faster adoption of smart features. Users with lower engagement scores received less "smart" benefit from the product. However, with a little initial engagement and help along the way to improve uncertain predictions, users could rapidly reap the benefits of their AI-powered device. Again – the user is in control and their engagement only serves to make the product more "magical".

Lastly, part of the "magic" of developing a small startup product like Buoy is the unparalleled cross-team interaction. There is little room for error in the startup world, but lots of room for collaboration. Avoiding "silos" and working together as an interdisciplinary team that listens to each other is crucial for success.

In our case, working across disciplines (product, data science, and design) was an exercise in fostering understanding and challenging each other with common sense and good reasoning. We learned what did and didn't work for our customers in a data-driven way and were able to rapidly iterate and adapt our vision to the reality of the situation. For us, the overlap of Product, Design, Data Science and Engineering is the place where true magic happens.