As artificial intelligence (AI) becomes ubiquitous across key user touchpoints in the products we build, it is essential to keep user feedback central to every key stage of development. Thoughtfully designing AI tools that can interpret user inputs and return valuable information requires an emphasis on three foundational principles, the three F’s: familiarity, fallbacks, and feedback. These elements not only create a framework for trust and reliability, but also ensure continuous feedback while navigating the still nascent AI product development landscape.

In this article, we’ll explore how to design AI features that prioritise user needs. Although the principles apply broadly, we’ll ground them with specific examples—particularly in applications involving large language models (LLMs), where users input data to receive tailored insights or recommendations. Examining these interactions helps reveal ways to enhance usability, build confidence, and improve system effectiveness through user-centric design.

Familiarity: Establishing trust and transparency

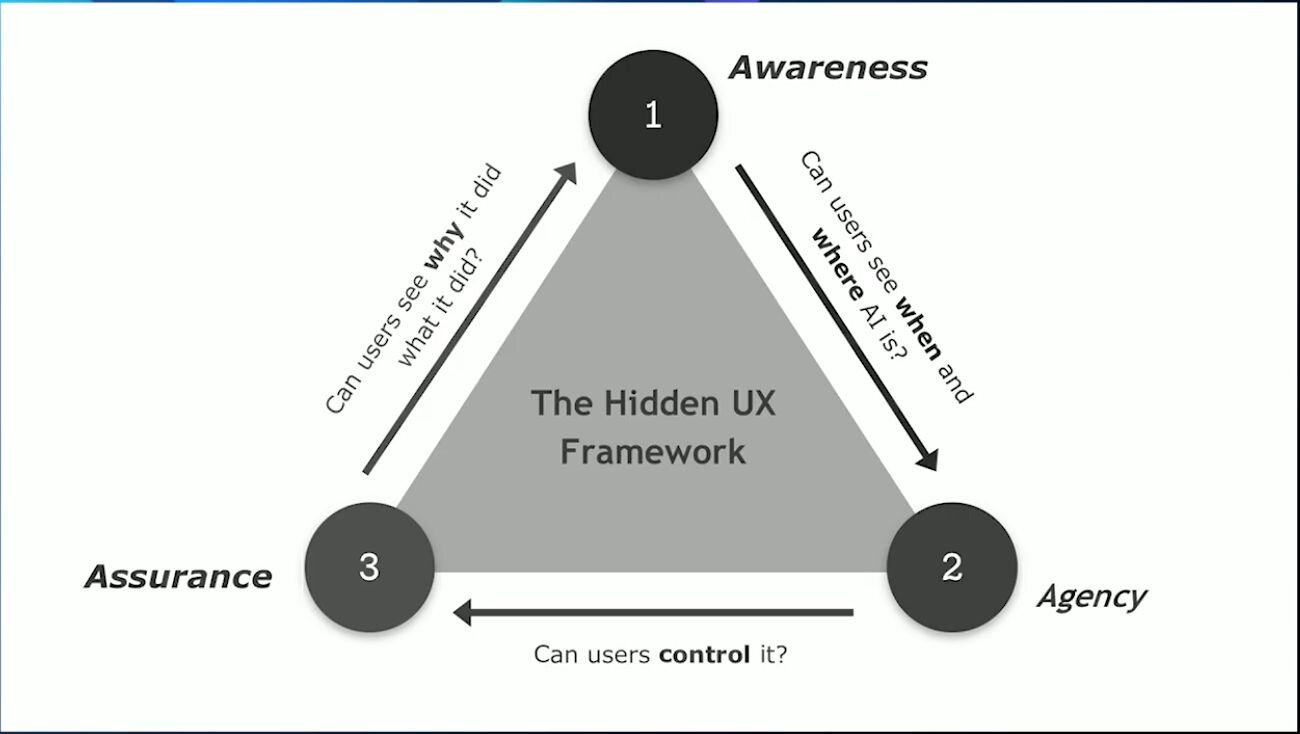

Building AI products with a foundation of trust is critical. Unlike conventional software, AI decisions can feel opaque or unpredictable. Providing transparency into how AI functions and making its decisions understandable fosters user confidence and mitigates concerns about accuracy. Users need to feel confident that the AI is reliable, fair, and respects their data and inputs. Trust is the cornerstone of user adoption and long-term success for AI-integrated features.

Transparency and explainability

Users often find it challenging to understand how AI systems generate outputs. Trust grows when users have insight into how the AI works, the data it relies on, and why it produces certain results. To build this trust, consider:

- Ethical and regulatory compliance: Show that your feature or product upholds robust data privacy and data protection standards and adheres to the relevant legal requirements.

- Ranking and fairness: Clearly communicate why specific results appear and how they are determined.

- Bias mitigation: Address and reduce biases within the AI model—such as gender or racial biases—and clarify how users can adjust inputs to minimise potential bias in outputs.

- Explainability: Make explainability an integral part of the user experience by providing clear, accessible explanations of the AI’s decision-making process. Educate users on effective ways to interact with AI to optimise results.

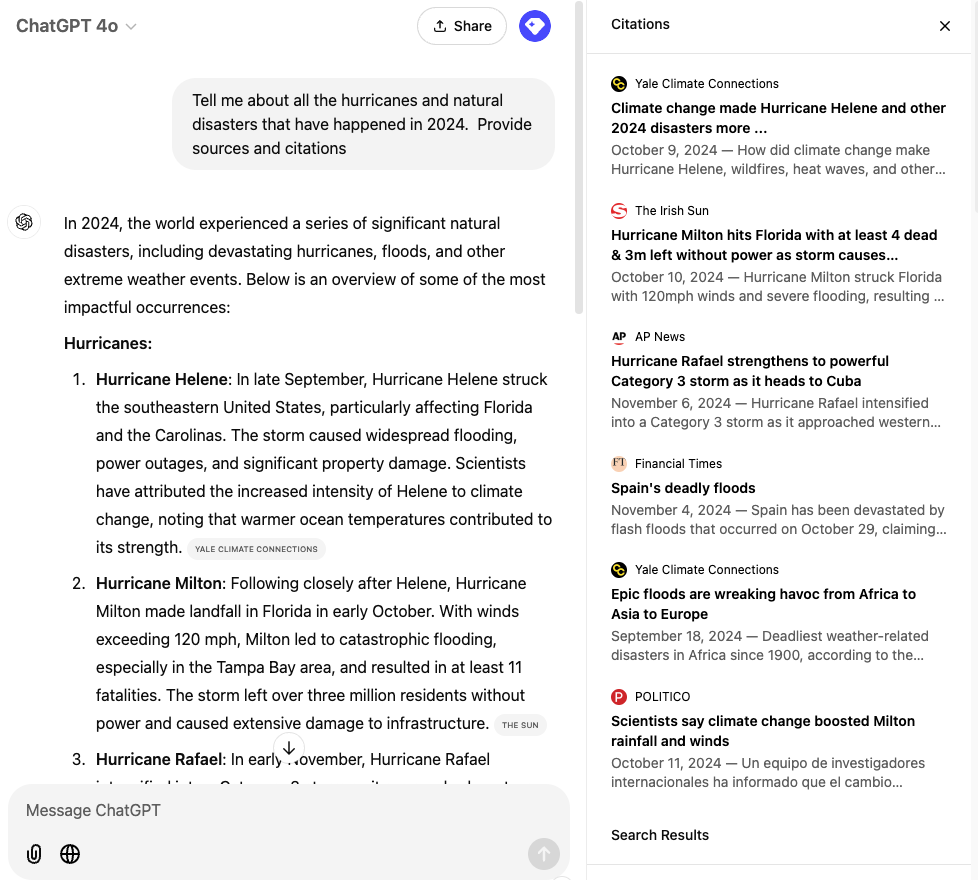

Citing sources

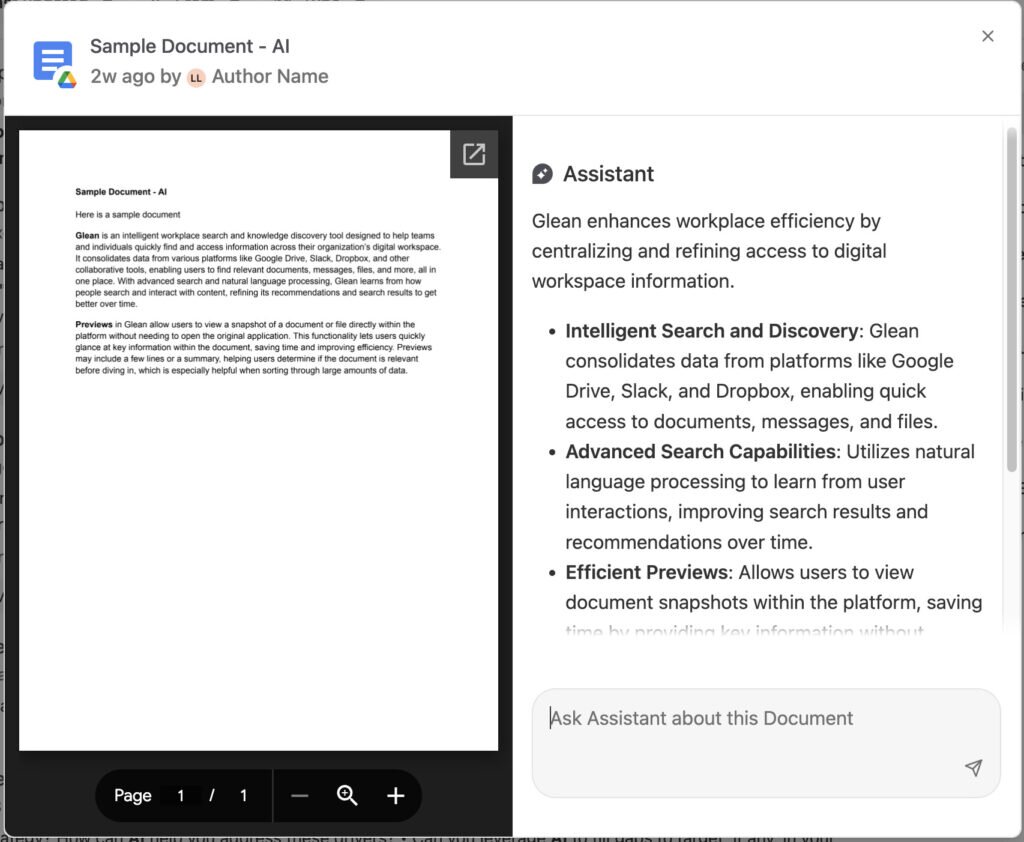

Transparency around data sources is another key trust factor. Users often want to know how information was gathered to generate insights or decisions. When possible, offer clear visibility into the origins of the data informing the AI’s responses. For example, showing relevant links or previews of source text can reassure users that outputs are grounded in reliable data. Clarifying whether the AI relies on publicly available data, proprietary sources, or user-specific inputs helps users feel confident in the objectivity and relevance of the information.
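One way to make source visibility concrete is to carry the sources alongside every answer so the interface can always render them. The following is a minimal sketch, not a definitive implementation: the `Source` and `Answer` types, the `origin` labels, and the `render_with_sources` helper are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    title: str
    url: str
    origin: str   # hypothetical labels: "public", "proprietary", "user_upload"
    excerpt: str  # the passage the answer relies on


@dataclass
class Answer:
    text: str
    sources: list[Source] = field(default_factory=list)


def render_with_sources(answer: Answer, preview_chars: int = 80) -> str:
    """Format an answer followed by a numbered list of its sources."""
    if not answer.sources:
        # Say so explicitly instead of implying the answer is grounded.
        return f"{answer.text}\n\nNo sources available for this answer."
    lines = [answer.text, "", "Sources:"]
    for i, src in enumerate(answer.sources, start=1):
        preview = src.excerpt[:preview_chars]
        if len(src.excerpt) > preview_chars:
            preview += "..."
        lines.append(f"[{i}] {src.title} ({src.origin}) {src.url}")
        lines.append(f'    "{preview}"')
    return "\n".join(lines)
```

Labelling each source with its origin lets the interface distinguish public data, proprietary data, and the user’s own uploads without extra lookups.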

Building familiarity and transparency is essential for fostering trust, reinforcing user confidence, and grounding AI outputs in clear, reliable information.

ChatGPT 4.0 Enterprise showing sources of its insights (Screenshot as of November 11, 2024)

GleanAI Enterprise, allowing users to verify the summarisation with the original source document (Screenshot as of November 24, 2024)

Fallbacks: Safeguards for complex scenarios

When AI systems encounter complex scenarios or produce uncertain outputs, robust fallback mechanisms are essential for both functionality and user trust. Fallbacks are particularly valuable when the AI faces scenarios outside its programmed scope. Simple queries may benefit from default responses, while more complex issues, like customer complaints, might warrant human intervention so that users feel supported even when the AI cannot confidently respond. These backup processes not only give AI features an alternative should functionality fail, but also redirect the user or make clear when the AI cannot confidently provide the correct output, so that the AI offers alternative support instead of failing outright. Fallbacks may include:

- Default responses: Provide reliable default answers or redirect users to helpful resources when the AI cannot produce a confident output.

- Manual alternatives: Allow users to solve the problem manually if possible.

- Human support: Offer human support or customer support systems as a fallback in complex situations.
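The three fallback types above can be sketched as a simple routing decision. This is an illustrative sketch under stated assumptions: it assumes your system can attach a confidence score between 0 and 1 to each output, and the `Route` names, the 0.7 threshold, and the message texts are hypothetical placeholders to tune for your own product.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AI_ANSWER = "ai_answer"
    DEFAULT_RESPONSE = "default_response"
    HUMAN_SUPPORT = "human_support"


@dataclass
class ModelResult:
    text: str
    confidence: float  # assumed score in [0, 1] supplied by your system


# Hypothetical copy; the default message also points to the manual alternative.
DEFAULT_MESSAGE = (
    "I'm not sure about this one. Here are some resources that may help, "
    "or you can complete this step manually."
)
HANDOFF_MESSAGE = "I'm connecting you with a member of our support team."


def choose_route(result: ModelResult, is_complex: bool, threshold: float = 0.7) -> Route:
    """Use the AI answer only when confidence clears the threshold."""
    if result.confidence >= threshold:
        return Route.AI_ANSWER
    # Low confidence: complex issues go to a person, simple ones get a default.
    return Route.HUMAN_SUPPORT if is_complex else Route.DEFAULT_RESPONSE


def respond(result: ModelResult, is_complex: bool, threshold: float = 0.7) -> tuple[Route, str]:
    """Return the chosen route together with the text shown to the user."""
    route = choose_route(result, is_complex, threshold)
    if route is Route.AI_ANSWER:
        return route, result.text
    if route is Route.HUMAN_SUPPORT:
        return route, HANDOFF_MESSAGE
    return route, DEFAULT_MESSAGE
```

Returning the route alongside the message makes it easy to log how often each fallback is triggered.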

Imagine a feature where AI validates information to complete a time-sensitive transaction. Delays in validation can significantly impact the user experience—potentially raising costs if delays increase fees, causing users to miss subsequent appointments, or simply creating frustration if this is the only way to complete the service. Building fallback mechanisms or options for quicker validation helps maintain user satisfaction and prevents these issues from disrupting the experience.

Key areas for fallbacks

When communicating fallback mechanisms, pay attention to key concerns around reliability, control, and performance:

- Reliability: Ensure the AI performs consistently across diverse scenarios, delivering accurate, actionable information. Consider what reliable alternatives you can provide if the AI’s answer is uncertain or incorrect.

- Control and autonomy: Allow users to adjust AI settings, such as sensitivity levels, to give them control over the AI experience. Users who feel empowered to adjust settings are more likely to trust and engage confidently with the system.

- Performance: Optimise response times and accuracy. If performance falters, such as during slow response times or misinterpretations, consider alternative ways to maintain user experience.
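For the performance concern in particular, one common pattern is to put a time budget on the AI call and switch to an alternative path when the budget is exceeded. The sketch below uses Python’s standard `concurrent.futures` module; `call_with_fallback`, `slow_validation`, and `manual_entry` are hypothetical names, and the timings are arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def call_with_fallback(ai_call, fallback, timeout_s: float):
    """Run ai_call with a time budget.

    Returns (result, reason), where reason is None when the AI call
    succeeded, "timeout" when it was too slow, or "error" when it raised.
    """
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(ai_call)
    try:
        return future.result(timeout=timeout_s), None
    except FutureTimeout:
        return fallback(), "timeout"
    except Exception:
        return fallback(), "error"
    finally:
        # Do not block the user on the slow call. Note that the worker
        # thread is not cancelled; it finishes in the background.
        executor.shutdown(wait=False)


def slow_validation() -> str:
    """Stand-in for an AI validation step that takes too long."""
    time.sleep(0.3)
    return "validated by AI"


def manual_entry() -> str:
    """Stand-in for a manual alternative offered to the user."""
    return "please confirm the details manually"
```

With a 0.05 second budget, `call_with_fallback(slow_validation, manual_entry, 0.05)` returns the manual path with the reason `"timeout"`, which can be logged to track how often performance falters.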

Robust fallbacks address the central issues of reliability and trust, providing support when the AI encounters uncertainty or complexity.

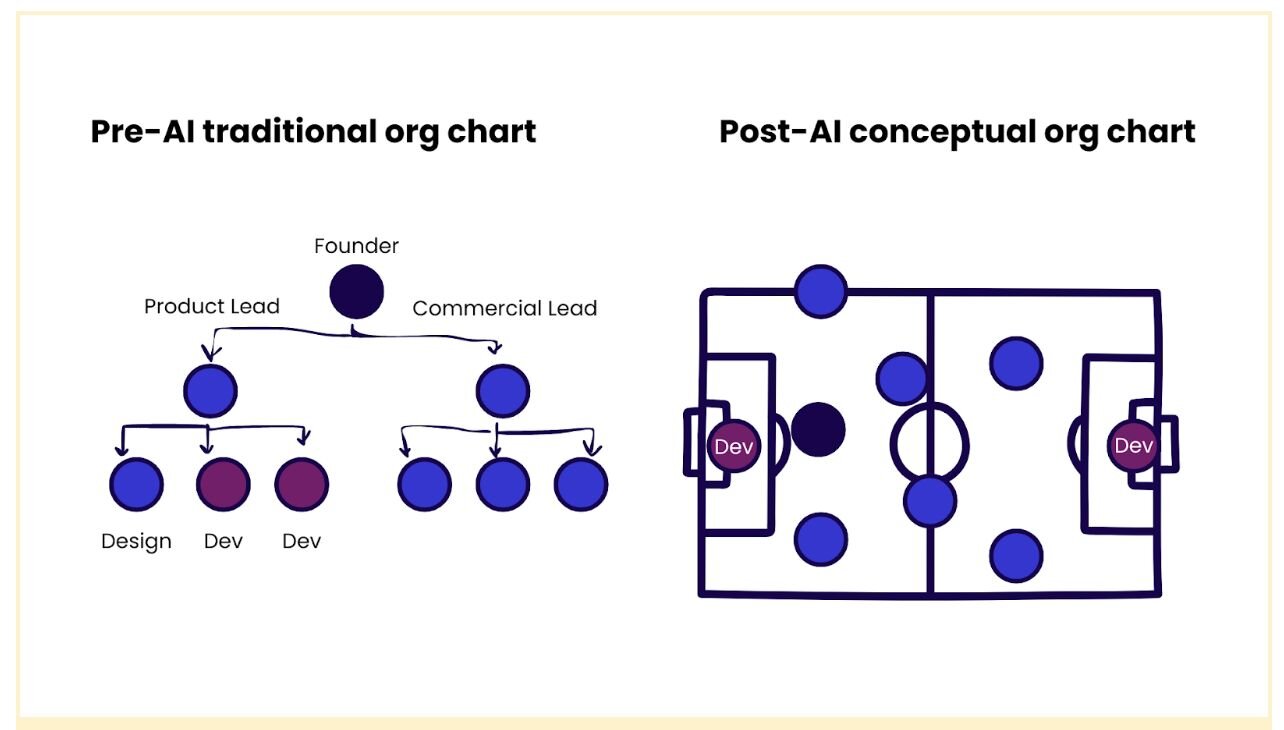

Feedback loops: Continuous improvement

Are feedback loops part of your product development strategy? In these early stages of AI feature development, implementing feedback loops is crucial. Product teams must actively research services that facilitate this and evaluate implementation timelines. Key areas to focus on include prompt version control, input management, and feedback corrections. With clear oversight of these components, you’ll gain a deeper understanding of whether your AI system is meeting its intended goals.

Imagine you’ve built a summarisation tool that processes loosely structured text from users. The technical ecosystem behind this tool would include: the prompt crafted by your product team, the user-provided input, the AI-generated summary, and, potentially, the user-edited final version of that summary.

To ensure quality, you’ll need to track how each component performs throughout the user journey. This enables you to identify problems like poor summarisation due to prompt flaws or hallucinated outputs. In this workflow, the user-edited version acts as a form of real-world QA—although thorough testing should always occur before launch.
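To illustrate, the four components of the summarisation example can be stored together as one record, with the user’s edits giving a rough quality signal. This is a minimal sketch: `SummaryRecord` and `edit_ratio` are hypothetical names, and character-level similarity from the standard `difflib` module is only one possible proxy for how much a user changed.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional


@dataclass
class SummaryRecord:
    prompt_version: str  # which version of the team's prompt was used
    user_input: str      # the loosely structured text the user provided
    ai_summary: str      # what the model returned
    user_edited_summary: Optional[str] = None  # None if accepted as-is


def edit_ratio(record: SummaryRecord) -> float:
    """Return 1 minus difflib's similarity ratio between the AI summary
    and the user's edited version: 0.0 means unchanged, values near 1.0
    mean the user largely rewrote it."""
    if record.user_edited_summary is None:
        return 0.0
    similarity = SequenceMatcher(
        None, record.ai_summary, record.user_edited_summary
    ).ratio()
    return 1.0 - similarity
```

A consistently high edit ratio for one prompt version is a hint, not proof, that the prompt needs attention; reviewing the underlying records is still needed to tell prompt flaws from hallucinated outputs.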

By embedding feedback loops into your process, you’ll not only improve the quality of your AI features but also foster trust and alignment with your users’ needs.

Version control for prompts

Effective version control of prompts is essential to maintain consistency and reliability in language model applications. It is also crucial to strike a balance between addressing specific edge cases and covering the general use cases that make up most interactions. Overloading prompts with edge cases can dilute the model's effectiveness in handling those primary scenarios. To avoid compromising overall performance, the fallback mechanisms discussed earlier can manage rare cases without detracting from the system’s ability to accurately and efficiently serve broader user needs.
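As a rough illustration of what prompt version control involves, the sketch below keeps an in-memory history of each prompt and assigns a new version number only when the text actually changes. `PromptRegistry` and its methods are hypothetical; in practice a dedicated service or your existing source control may fill this role.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str
    checksum: str  # short hash of the template text


class PromptRegistry:
    """In-memory prompt history, keyed by prompt name."""

    def __init__(self) -> None:
        self._versions: dict[str, list[PromptVersion]] = {}

    def register(self, name: str, template: str) -> PromptVersion:
        history = self._versions.setdefault(name, [])
        checksum = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        if history and history[-1].checksum == checksum:
            return history[-1]  # unchanged text: keep the current version
        new_version = PromptVersion(name, len(history) + 1, template, checksum)
        history.append(new_version)
        return new_version

    def latest(self, name: str) -> PromptVersion:
        return self._versions[name][-1]

    def get(self, name: str, version: int) -> PromptVersion:
        return self._versions[name][version - 1]  # versions start at 1
```

Recording the version number with every model call makes it possible to trace a change in output quality back to a specific prompt edit, for example one that added an edge case.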

Insight into user interactions

Implementing thorough monitoring of inputs and interactions is crucial for understanding the flow and effectiveness of your language model. By capturing every interaction—prompts, queries, inputs, and outputs—you gain a detailed view into how the model responds at each stage. This insight is invaluable for identifying and debugging issues, evaluating performance, and refining the model to improve the user experience. Such comprehensive tracking not only enhances transparency but also supports continuous optimisation, ensuring the model aligns more closely with user needs and operational goals.

For both version control and input monitoring, services like LangSmith provide robust tracking solutions, ensuring that AI interactions can be easily refined based on performance insights.

Now, imagine a tool designed to assist researchers with thematic analysis of text inputs (interviews, notes, etc.). Is the analysis conducted directly on original inputs? Do users modify the analysis during subsequent submissions to better highlight themes that the initial prompts might have missed?

By understanding how users interact with the system—tracking their inputs, the prompts used, and any modifications made—you can better inform future iterations of the tool. Allowing edits to the final output offers a built-in quality assurance mechanism, providing a clearer understanding of the user’s intent and helping you refine the model to meet those expectations.
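One simple way to turn tracked interactions into input for the next iteration is to compare how often users modify the output under each prompt version. The sketch below assumes each logged interaction is a dictionary with hypothetical `prompt_version` and `was_edited` keys; your own logging schema will differ.

```python
from collections import defaultdict
from statistics import mean


def edit_rate_by_prompt_version(interactions):
    """Return the share of outputs users edited, per prompt version.

    `interactions` is an iterable of dicts with a 'prompt_version' string
    and a 'was_edited' boolean (both assumed field names).
    """
    grouped = defaultdict(list)
    for item in interactions:
        grouped[item["prompt_version"]].append(1.0 if item["was_edited"] else 0.0)
    return {version: mean(flags) for version, flags in grouped.items()}


# Illustrative data only, not real measurements.
sample_log = [
    {"prompt_version": "v1", "was_edited": True},
    {"prompt_version": "v1", "was_edited": False},
    {"prompt_version": "v2", "was_edited": False},
]
```

Here `edit_rate_by_prompt_version(sample_log)` gives `{"v1": 0.5, "v2": 0.0}`. With real traffic, a lower edit rate for a newer prompt version is a useful signal, though sample sizes and differences in user inputs should be considered before drawing conclusions.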

Comprehensive monitoring and iterative improvement are the foundation for building language models that deliver both accuracy and relevance, ensuring they remain effective and user-focused over time.

Writing/righting wrongs

AI features are built with good intentions, but they can sometimes produce incorrect or incomplete results, and that is understandable. With the right onboarding and communication, users generally accept that certain scenarios are complex and may have nuances that AI can’t fully capture. However, it’s crucial to provide mechanisms that allow users to report inaccuracies. For instance, if an application provides wrong information, is there an easy way for users to flag it as inaccurate so it can be reviewed and improved? Welcoming feedback and actively encouraging users to report issues demonstrates a commitment to quality and user experience. This approach is particularly useful in situations like poor language translations or incorrect entity matching, where users can contribute to refining the accuracy of the AI. By making it clear that feedback is valued, you not only enhance the product’s reliability but also foster trust and show users that their input matters in shaping a more responsive, user-centred AI.
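A flagging mechanism can start very small. The sketch below collects user reports against an output identifier and surfaces outputs for review once they pass a threshold. `FlagReport`, `ReviewQueue`, the reason labels, and the threshold of two reports are all hypothetical choices for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class FlagReport:
    output_id: str
    reason: str        # hypothetical labels, e.g. "wrong_translation"
    comment: str = ""  # optional free text from the user


@dataclass
class ReviewQueue:
    review_threshold: int = 2
    reports: list[FlagReport] = field(default_factory=list)

    def flag(self, report: FlagReport) -> None:
        """Record a user's report that an output was inaccurate."""
        self.reports.append(report)

    def needs_review(self) -> list[str]:
        """Return IDs of outputs flagged at least `review_threshold` times."""
        counts = Counter(r.output_id for r in self.reports)
        return sorted(
            output_id
            for output_id, n in counts.items()
            if n >= self.review_threshold
        )
```

A threshold keeps one-off misclicks from flooding reviewers, but for high-stakes outputs a threshold of one may be more appropriate.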

---

User testing and research

In AI product design, being attuned to users’ emotional responses is even more critical than in typical usability testing. Emotional cues, whether expressed through hesitation, uncertainty, or subtle questions about how the AI operates, can reveal underlying trust or discomfort issues that may not be explicitly stated. For instance, a question like “Is this from my text that I uploaded here?” might signal deeper concerns about hallucinations or credibility. Recognising and exploring these emotional signals allows designers to address the root causes of user friction, building an AI experience that is both more trustworthy and empathetic. By actively looking for these cues, you can identify where users might be overly trusting or sceptical, helping to inform more balanced and user-centric design adjustments.

Effective feedback loops are essential in any product development process to drive continuous improvement. Investing in the right tools, infrastructure, and practices ensures user perspectives can be captured in context at every step of the process.

Concluding thoughts

In designing AI features that users can confidently engage with, fostering familiarity, establishing effective feedback loops, and implementing reliable fallback mechanisms are essential pillars. While this approach is not vastly different from general user testing, it does place additional emphasis on key areas when dealing with AI. Building familiarity cultivates trust, as users need intuitive, consistent interactions to feel comfortable inputting data and relying on AI-driven insights. Fallbacks act as safeguards, ensuring that if the AI encounters edge cases or uncertainties, users still receive reliable responses rather than errors or overly complex outputs. Lastly, feedback loops allow for continuous refinement based on real user interactions, enabling the AI to better meet user needs over time while addressing potential areas of confusion or misinterpretation. The software needed for this may extend beyond the technical stack available in your company and may require additional sourcing and time to implement.

As AI continues to evolve, user-centred design principles will remain essential for ensuring these tools genuinely serve and empower their users. Additionally, developing sensitivity in user interviews to capture emotional responses can provide valuable insights into user trust, comfort, and concerns, leading to more effective AI product development. This attention to both technical transparency and emotional resonance strengthens the AI’s ability to address real user needs.

Fundamentally, understanding the problem you are solving for the user—and their underlying needs and concerns—is the cornerstone of creating great products. This process spans various stages of the AI feature development cycle, from prompt engineering to building the supporting infrastructure that powers functionality, to designing the visualised features users interact with. By focusing on these principles, this article aims to highlight key, generalisable areas that help navigate common pitfalls and ensure your AI products are user-centred, trustworthy, and effective.