The rapid advancement of AI has revolutionized image generation, making it possible to create stunning, realistic images from mere text descriptions. This technology is poised to transform many industries and redefine the boundaries of creativity. However, it also raises concerns about responsible governance. Robust safeguards are needed to protect children and ensure ethical use of AI models, including preventing the misuse of personal data and guarding against bias and discrimination. Transparency is crucial for responsible AI development: the tech sector must communicate openly about AI's capabilities, limitations, and potential risks. By fostering open dialogue, we can harness AI for the betterment of society.

Image generation AI holds immense potential, but its development and deployment must be guided by responsible governance and transparent communication to ensure ethical and beneficial use.

Revolutionizing creative fields

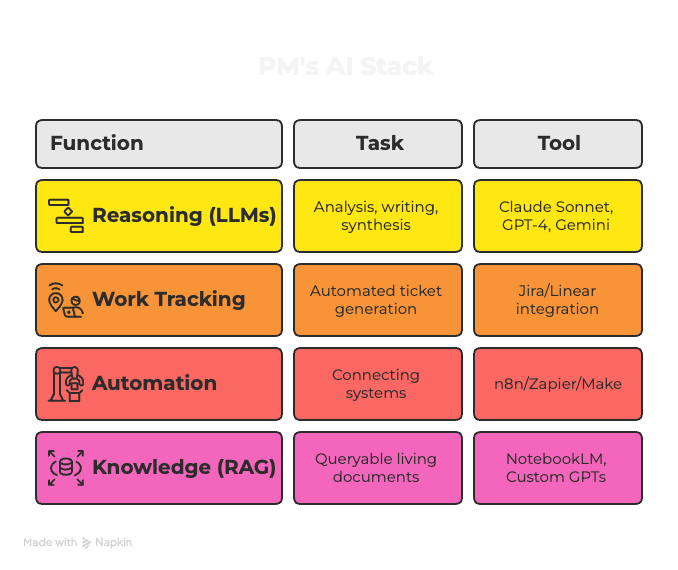

AI is transforming the creative world. New AI models can generate stunning images, write music, and even design products, giving artists and designers unprecedented tools to express themselves and create innovative work.

Generating cartoon characters from text to speed up the creative process

Personalized content creation

Image generation AI also holds immense potential for personalized content creation. With the ability to generate images tailored to individual preferences and interests, this technology could revolutionize user experiences in many domains. For example, e-commerce platforms could use AI to generate personalized product recommendations with accompanying visuals, while social media platforms could enable users to create unique avatars and profile images.

Ethical considerations and challenges

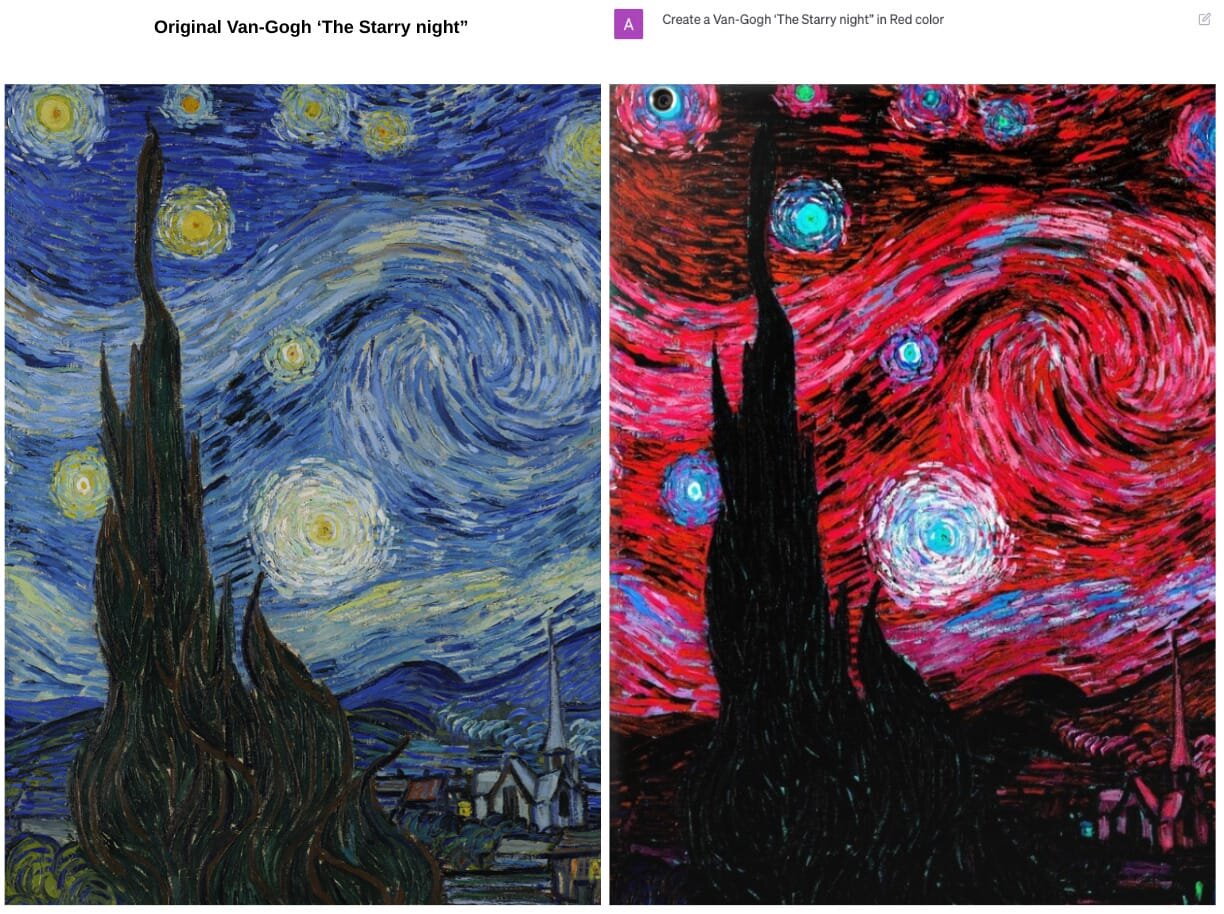

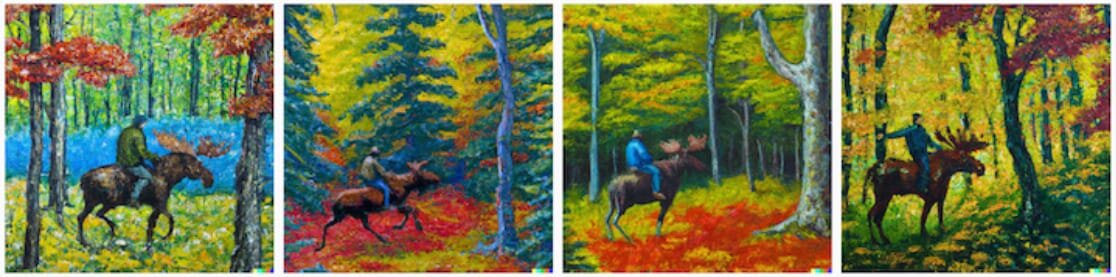

While the potential of image generation AI is vast, it's crucial to consider the ethical implications and challenges that accompany this technology. Concerns about misuse, such as the creation of deepfakes or the spread of misinformation, must be addressed through responsible development and deployment practices. Additionally, properly labeling and attributing AI-generated images is essential for maintaining transparency, giving credit where it is due, and preventing copyright infringement. For instance, anyone can generate an imitation of Van Gogh’s ‘The Starry Night’ within minutes without crediting the original work.

Responsible AI – the need of the hour

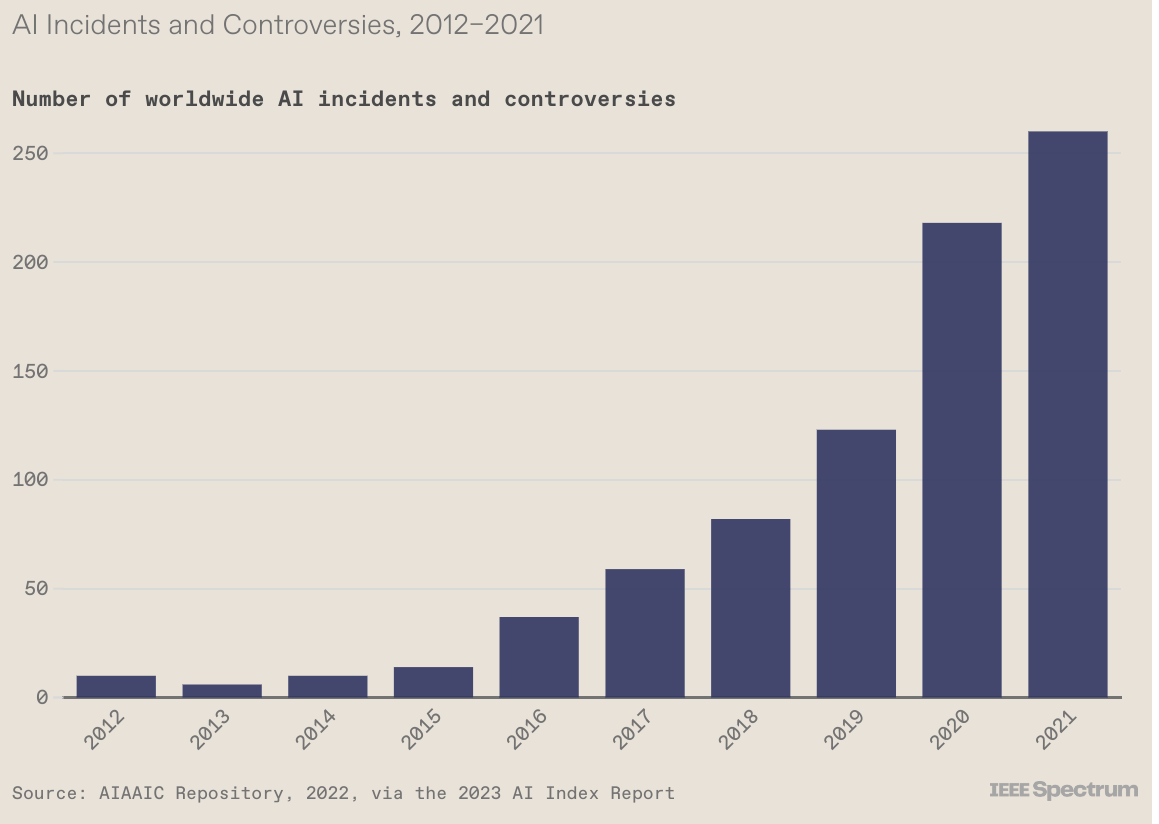

At the moment, newsletters are filled with "AI-enabled" products. But just because something is possible doesn't mean it is good. It is more urgent than ever to ensure these products are built responsibly. Governments also have a responsibility to put guardrails in place before harmful incidents occur.

The good news is that, having learned from a series of lawsuits, big tech companies have invested in "ethical AI" and "responsible AI". Companies such as Microsoft and Google have also started efforts to watermark AI-generated images.

Historically, regulation has followed technological change rather than preceded it, and big tech companies have faced scrutiny over data privacy and security for more than a decade. I am hopeful that, in 100 years' time, AI will match and surpass the progress that followed the discovery of fire, electricity and the internet.

Children and sensitive content

AI-generated images have the potential to profoundly affect how children interact with the internet and with each other. On the one hand, AI image generation can be used to create educational and entertaining content for kids. For example, AI can depict historical figures, places, and events to help children learn, and it can create personalized stories and games. On the other hand, there are significant concerns about using this technology to produce harmful content aimed at children.

AI could be used to generate images depicting child abuse, violence, and other disturbing content. In fact, AI image generation can be, and already has been, maliciously used to create convincing fake explicit images and videos of children. The anonymity that AI tools afford makes this misuse difficult to track and control. Just as society has built guardrails such as age limits for alcohol, we need similar safeguards for AI. Countries should establish policies and rules to protect children from misuse of this technology, including strict policies that categorize and limit the generation of certain explicit content to prevent harm and abuse.

Because AI is easy to access and increasingly integrated into everyday products, controlling its use is challenging. This underscores the urgent need for measures that monitor AI-generated content and restrict children's access to it. While the educational benefits are promising, the potential for harm is equally real. A comprehensive, coordinated effort is required so that AI enriches rather than endangers young lives, and clear guidelines with consistent enforcement will help achieve this balance.

The graph below summarizes the prevalence of AI misuse and shows why action is needed more urgently than ever.

Models trained on your personal data

Using a model trained on your personal data is almost like having someone beside you who knows all your personal details. This presents both opportunities and risks. On the one hand, such a model could help students with their homework or help individuals find the right medical care. On the other hand, risks such as privacy violations, misuse of data, and algorithmic bias remain.

To mitigate these risks, it is essential to implement rigorous consent processes and data protection measures. Users should be fully informed when their personal information is collected and used for model training, and they should have a choice in how their data is used. Additionally, companies and researchers must take steps to ensure that their models are fair and unbiased, and that they are not misused for harmful purposes.

Here are some of the key risks associated with models trained on personal data:

- Bias and discrimination: If the training data contains societal biases, the model can inherit and even amplify them. This can lead to unfair and discriminatory outcomes, such as a model that disproportionately predicts that people of color will commit crimes.

- Lack of consent: Using personal data without explicit consent violates individual privacy and autonomy. People should have a choice in how their data is used, especially for sensitive purposes like training AI models.

- Misuse potential: Models trained on detailed personal data could be exploited for harmful purposes like targeted disinformation campaigns or identity theft. Criminals may look to access and misuse such models.

- Loss of control: When an AI system has deep insight into people's lives, gleaned from its training data, it could be used to manipulate them and exploit their vulnerabilities at scale. As a result, people lose control over their own information.

Despite the risks, models trained on personal data can offer significant benefits. For example, they can be used to develop more accurate and personalized medical diagnoses, create more engaging and educational content, and improve the efficiency of customer service operations.

To harness the benefits of these models while minimizing the risks, it is important to develop and implement ethical guidelines and regulations. These guidelines should emphasize the importance of consent, transparency, fairness, and accountability. By working together, policymakers, companies, and researchers can help ensure that AI trained on personal data is used for good.

Recommendations for regulations

Here are some specific recommendations for regulations that could help mitigate the risks discussed above, from AI-generated content to AI trained on personal data:

- Mandate watermarks and proper attribution for AI-generated content. This would make it harder to misuse AI content for malicious purposes, such as spreading disinformation or creating deepfakes.

- Develop centralized databases to help detect deepfakes and other manipulated media. This would help protect people from the harm such content can cause.

- Require consent for personal data usage in model training. This would ensure that people have a choice in how their data is used, and that they are fully informed of the risks and benefits before consenting.

- Institute age verification mechanisms to restrict children's access to potentially harmful AI imagery. This would help to protect children from the harmful effects of AI imagery that is violent, sexually suggestive, or otherwise inappropriate for their age.

By implementing these and other regulations, we can help to ensure that AI trained on personal data is used in a safe, ethical, and responsible manner.

What is your exposure and responsibility?

AI literacy is essential for creating AI that serves broad societal interests rather than a select few. Equipping users with the knowledge and tools to make informed decisions about AI is critical to ensuring that it is used for good. It is also crucial to train AI models on high-quality, diverse data to realize AI's benefits. Yet there are significant risks associated with its use, particularly when it comes to personal data. That's why it's so important to educate the broader public on how their data is used to train AI models. As policymakers and technologists, we have a responsibility to communicate transparently about AI and empower people to make informed choices about data sharing.

Comprehensive educational initiatives can help users understand the basics of AI, its potential benefits and risks, and their rights and responsibilities. This knowledge can empower users to advocate for themselves and make effective use of policies designed to reflect their preferences. By promoting AI literacy and transparency, we can enable people to participate meaningfully in shaping the technologies that rely on their data.

Ultimately, the advent of AI image generation represents a truly revolutionary moment, providing creators with boundless new capabilities while also raising critical ethical questions. As this technology proliferates, we must keep pushing for responsible governance, safety mechanisms, and transparency around data practices. If guided by ethical principles and a commitment to public education, AI image creation offers immense potential to expand creativity, personalized services, and visual communication. While risks remain, the overwhelmingly positive applications across industries and the new access it gives non-artists demonstrate the profound value of this emerging field. With cautious optimism and collective oversight, we can use these groundbreaking AI systems to reshape the landscape of creativity for the better.