Everyone wants to be in an evidence-driven team, using research and data to drive their product decisions. We all know it’s the best way to work, but what does it mean in practice? How can you tell if your team is truly evidence-driven? And if it’s not, how do you change things for the better?

Teams may think they’re evidence-driven because they track metrics, run experiments, or state goals in terms of outcomes rather than outputs. These are great practices, but it takes a more cohesive approach and culture to really unlock value. Knowing where your team starts from can help you to identify weaker areas, and to start the conversations that help to move your team towards being evidence-driven.

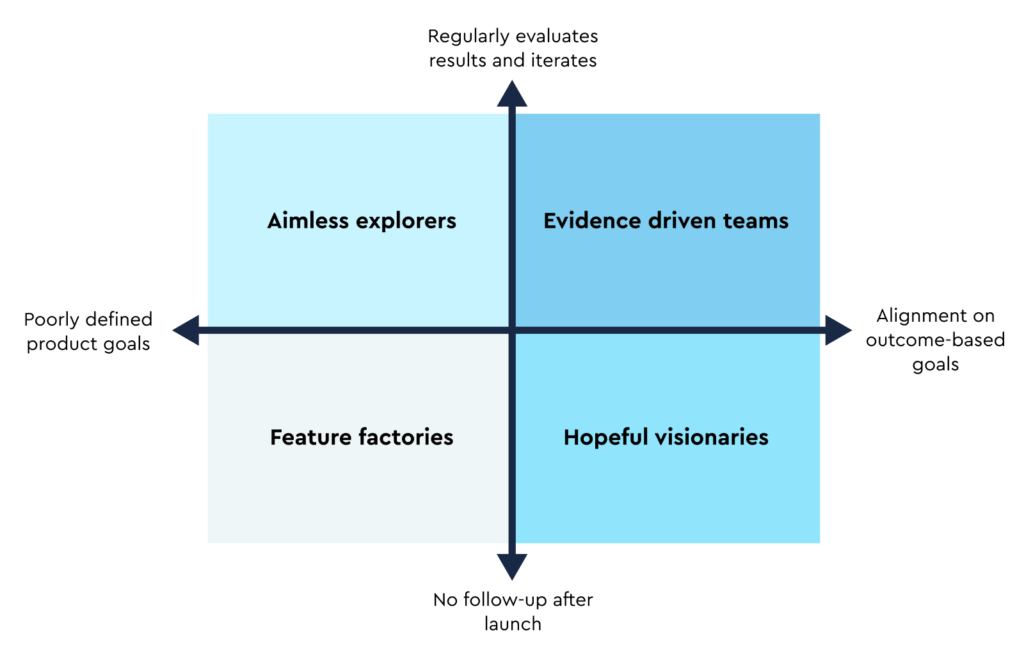

The metrics maturity landscape

After working with and talking to many product teams, I started to realise that the teams who used metrics most successfully to transform their business share two characteristics. They set clear desired outcomes and goals upfront, and they follow up on the results of what they build to see whether it worked and achieved those outcomes. This may seem like common sense, but in reality, most teams struggle with one or both of these practices.

When you look at how teams behave in these areas, you start to see patterns in the way they operate. Let's look at four rough groupings, see how their use (or lack) of goals and follow-up affects their success, and consider where each might start if they want to change for the better.

Feature factories

John Cutler coined the term "feature factory" to describe teams who prioritise building above all else. These teams may have some goals, but those goals are more likely to describe a feature to be built than a measure that will drive value. Their roadmaps are filled with lists of features to be completed, and everyone knows at the start of an effort what the end result will look like. There is little space for testing or iteration, and once a feature is delivered, it is considered "done" and the team moves on.

Because the goals are generally delivery-focused (on time and on budget), there is little reason to go back and evaluate how past projects performed. The measure of success is "Did we ship the features?" rather than "Did the features achieve the outcomes we hoped for?"

Combating the feature factory

A feature factory is usually set up by people in other parts of the organisation who have little incentive to change the structure, which makes it difficult to fight. Things often work well enough that the missing connection between building and results can be glossed over, and the people who manage the budget get to choose what gets built. The chosen features often come from conversations that these gatekeepers have had with customers. Moving to a model where the team doesn't simply build what the customer asked for will (at least temporarily) make their jobs harder. So what can you do?

As with many challenges in product, start with the goals. Work with your stakeholders to define your product vision, strategy, and goals (or, if they're already defined, make sure everyone is aligned around them), and make sure the full decision stack is in place. Start to challenge the features that make it onto "the list", and ask why each one is there. Dig into the real problem each feature request is meant to solve, and make it a regular practice to look for other ways to achieve the same outcome. This won't be easy, and you will likely get pushback. But once people are in the habit of articulating what they're trying to achieve by building a feature, you can start to guide them towards measuring results and iterating before moving on to the next feature.

Aimless explorers

Aimless explorers look like they have things figured out. They have bought into the “build-measure-learn” cycle, and test constantly. Every aspect of their product is set up for analytics and data gathering, and you will see numbers and metrics presented in every conversation. These teams may even choose their experiments based on good qualitative research, identifying pain points from user research and working toward solutions to add value for their customers.

This can give the illusion of being evidence-driven, but their testing isn't aligned with a clear product goal. The measure of success is "Did we move the numbers?" rather than "Did the numbers we moved matter?"

Bringing direction to the aimless explorers

If you're an aimless explorer, the good news is that you have already grasped the concepts most teams struggle with: you shouldn't rely on your gut, and there are better ways to learn than building a full product or feature and seeing what happens. But you won't truly unlock the value of being evidence-driven until you connect your experimentation to clear, defined product goals that focus the team's energy in an intentional direction. Saying "Our goal is to grow" isn't enough. Get more specific: the segments you want to grow first, the areas of the product you want to improve to build a competitive advantage, or any other set of aims that helps you narrow down the opportunity space. This will give your exploration more impact.

To find that focus and direction, a good place to start is Teresa Torres' Opportunity Solution Tree. It provides a framework to help your team evaluate the goals you're trying to achieve and work through opportunities before you spend a lot of effort on testing.

Hopeful visionaries

Take a feature factory, add a business plan, and you've got a hopeful visionary. These teams create well-defined goals and outcomes to drive towards, and are often great at making the "business case" for a feature they want to build. They form hypotheses about how to achieve their outcomes, and define features to build based on their goals. Hopeful visionaries thrive in environments with long planning cycles and budgets approved by executives (typically annually), because by the next funding request, no one remembers what they promised the year before.

But shortly after a feature launches, they lose sight of the metrics, fail to follow through on whether the outcomes are being delivered, and move on to the next thing. The measure of success is "Did we focus on the goals at kick-off?" rather than "Are we still focused on goals after launch?"

A dose of reality for the hopeful visionaries

It can be difficult to break the hopeful visionary cycle, because it often arises from the environment. Funding depends on big visions and wild promises that this feature will bring in millions, if only the team is given the chance to build it. And because there is no follow-up of any consequence, there is no incentive to make sure the plans are grounded in reality.

The simplest way to change this is from the top: change the way you fund development. Fund teams and give them outcomes to achieve, rather than funding projects and checking if the features have been built. But even if you aren’t an executive who can change the way the company operates, you can make progress within your team. Start presenting metrics on everything you build, even if (or maybe especially if) the numbers don’t show a wild success. Place those numbers next to what was presented in the business case. It doesn’t take many cycles of “We said this feature would bring in $250,000 in new revenue…it has brought in $10,000” or “We were promised a partnership with this company would drive 500,000 new users…they’ve brought us 20” before stakeholders start to temper their claims when trying to get a feature built. (Are those real examples from my career? I can neither confirm nor deny. 😉)

The scary part is that you also have to hold yourself accountable. It can be fun to be a product manager in a hopeful visionary environment, because you get to build things you want with very little justification. But doing this doesn't drive your product to success. So be prepared to admit that something you thought would be great wasn't great in the end. Then you can start to introduce modern discovery practices to find a better path.

Evidence-driven teams

This is the kind of team we all want to be. At their core, evidence-driven teams are focused on outcomes. They use their business goals to drive their metrics, and their metrics to drive their priorities. They live in a productive "build-measure-learn" cycle, and don't stop measuring once the "v1" or "MVP" of a feature is released. These teams also understand that not every idea they test will be a winner, and they are prepared to learn from failure.

Alignment across the organisation is key to building an evidence-driven team. A shared understanding of goals and purpose allows leaders to truly empower their teams, knowing those teams are making decisions based on solid information, not wild guesses. Their measures of success are both "Are our priorities in line with our goals?" AND "Are we seeing results from what we're building?"

The responsibilities of evidence-driven teams

Evidence-driven teams may have figured out how to use metrics to drive business value, but it can be dangerous if they become myopic and lose sight of the broader implications of their products. Whether we like it or not, our products exist in an ecosystem, and we have a responsibility to society to consider the potential impact of what we build. Regardless of where your organisation sits in the metrics maturity landscape, it is important to consider the unintended consequences and potential for harm of your products. This is especially important for evidence-driven teams, because they have learned how to get the most traction out of what they ship.

Where does your team sit today?

It is important to note that while these categories fit neatly into boxes, most teams and people don't. A team can easily sit in different categories across different projects or areas of the product. This is especially true of teams that are still learning and trying different ways of working, or evolving their practices. But it helps to honestly evaluate where your team sits most of the time. Don't cherry-pick the one effort you ran well and conclude you've got it all figured out. Likewise, if you've made real progress, don't let one feature that you knowingly built on gut instinct (because you needed to move forward) convince you that your team is back at the beginning. Think about your team's default position when using metrics, and use it as a catalyst for a conversation about what you can try next to become more evidence-driven.
